Mirror of https://github.com/netdata/netdata.git, synced 2025-04-06 14:35:32 +00:00

Windows installer + ML (all) improved (#20021)

* Deployment Guides Improved
* Windows installer + ML (all) improved
* fix formatting and some minor changes

---------

Co-authored-by: Ilya Mashchenko <ilya@netdata.cloud>

parent 543aa59782

commit 5a9b3ecadf

6 changed files with 234 additions and 204 deletions

@@ -1,7 +1,19 @@

# Machine Learning and Anomaly Detection

Netdata provides advanced Machine Learning features to help you identify and troubleshoot anomalies and unexpected behavior in your infrastructure before they become critical issues:

Netdata includes advanced Machine Learning capabilities to help you detect and resolve anomalies in your infrastructure before they escalate into critical issues. These features provide real-time insights and proactive monitoring to improve system reliability.

- K-means clustering [Machine Learning models](/src/ml/README.md) are trained to power the [Anomaly Advisor](/docs/dashboards-and-charts/anomaly-advisor-tab.md) on the dashboard, which allows you to identify anomalies in your infrastructure.
- [Metric Correlations](/docs/metric-correlations.md) are available through the dashboard using the [Two-sample Kolmogorov-Smirnov](https://en.wikipedia.org/wiki/Kolmogorov%E2%80%93Smirnov_test#Two-sample_Kolmogorov%E2%80%93Smirnov_test) statistical test and Volume heuristic measures.
- The [Netdata Assistant](/docs/netdata-assistant.md) can answer your prompts when you are troubleshooting alerts and anomalies.

## Key Features

### Anomaly Detection with K-Means Clustering

Netdata trains K-means clustering models to detect anomalies in your infrastructure. These models power the [Anomaly Advisor](/docs/dashboards-and-charts/anomaly-advisor-tab.md), which visually highlights anomalies on the dashboard, allowing you to quickly identify and investigate unexpected behavior.

### Metric Correlations

Netdata enables metric correlation analysis through the dashboard. This feature uses the [Two-sample Kolmogorov-Smirnov test](https://en.wikipedia.org/wiki/Kolmogorov%E2%80%93Smirnov_test#Two-sample_Kolmogorov%E2%80%93Smirnov_test) and volume heuristic measures to help you understand relationships between different metrics and identify potential causes of anomalies.

### Netdata Assistant for Troubleshooting

The [Netdata Assistant](/docs/netdata-assistant.md) provides AI-driven assistance for troubleshooting alerts and anomalies. You can interact with it directly to get explanations, recommendations, and next steps based on detected anomalies and system behavior.

These Machine Learning features enhance observability and streamline incident response, helping you maintain system health with greater efficiency.

@@ -1,66 +1,66 @@

# Metric Correlations

The Metric Correlations feature lets you quickly find metrics and charts related to a particular window of interest that you want to explore further.

The Metric Correlations feature helps you quickly identify metrics and charts relevant to a specific time window of interest, allowing for faster root cause analysis.

By displaying the standard Netdata dashboard, filtered to show only charts that are relevant to the window of interest, you can get to the root cause sooner.

By filtering the standard Netdata dashboard to display only the most relevant charts, Metric Correlations makes it easier to pinpoint anomalies and investigate issues.

Because Metric Correlations uses every available metric from your infrastructure, at up to 1-second granularity, you get the most accurate insights possible.

Since it leverages every available metric in your infrastructure with up to 1-second granularity, Metric Correlations provides highly accurate insights.

## Using Metric Correlations

When viewing the [Metrics tab or a single-node dashboard](/docs/dashboards-and-charts/metrics-tab-and-single-node-tabs.md), the **Metric Correlations** button appears in the top-right corner of the page.

When viewing the [Metrics tab or a single-node dashboard](/docs/dashboards-and-charts/metrics-tab-and-single-node-tabs.md), you'll find the **Metric Correlations** button in the top-right corner.

To start correlating metrics, click the **Metric Correlations** button, then [highlight a selection of metrics](/docs/dashboards-and-charts/netdata-charts.md#highlight) on a single chart. The selected timeframe must be at least 15 seconds long for Metric Correlations to work.

To start:

The menu then displays information about the selected area and the reference baseline. Metric Correlations uses the reference baseline to discover which additional metrics are most closely connected to the selected metrics. The reference baseline covers the period immediately preceding the highlighted window and is four times as long as the highlighted window. This keeps the baseline directly before the window of interest while making it long enough to be a representative short-term baseline.

1. Click **Metric Correlations**.
2. [Highlight a selection of metrics](/docs/dashboards-and-charts/netdata-charts.md#highlight) on a single chart. The selected timeframe must be at least 15 seconds.
3. The menu displays details about the selected area and reference baseline. Metric Correlations compares the highlighted window to a reference baseline that is four times its length and immediately precedes it.
4. Click **Find Correlations**. This button is only active if a valid timeframe is selected.
5. The process evaluates all available metrics and returns a filtered Netdata dashboard showing only the metrics that changed the most between the baseline and the highlighted window.
6. If needed, select another window and press **Find Correlations** again to refine your analysis.
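The baseline rule described above can be expressed as a small helper. This is a hypothetical illustration, not Netdata's code. The function name `baseline_window` and the use of Unix timestamps in seconds are assumptions. Only three things come from this page: the baseline is four times as long as the highlight, it sits immediately before the highlight, and the highlight must be at least 15 seconds.

```python
MIN_HIGHLIGHT_SECONDS = 15  # minimum highlighted timeframe stated above
BASELINE_MULTIPLIER = 4     # baseline is four times the highlighted window


def baseline_window(highlight_after: int, highlight_before: int) -> tuple[int, int]:
    """Return (baseline_after, baseline_before) for a highlighted window.

    Timestamps are Unix epoch seconds and the window spans [after, before].
    """
    duration = highlight_before - highlight_after
    if duration < MIN_HIGHLIGHT_SECONDS:
        raise ValueError(
            f"highlighted window is {duration}s; at least {MIN_HIGHLIGHT_SECONDS}s is required"
        )
    # The baseline ends exactly where the highlight starts and is 4x as long.
    baseline_before = highlight_after
    baseline_after = highlight_after - BASELINE_MULTIPLIER * duration
    return baseline_after, baseline_before


# A 60-second highlight from t=940 to t=1000 gets a 240-second baseline.
print(baseline_window(940, 1000))  # (700, 940)
```

Rejecting windows shorter than 15 seconds mirrors the dashboard behavior, where **Find Correlations** stays inactive until a valid timeframe is selected.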

Click the **Find Correlations** button to begin the correlation process. This button is only active if a valid timeframe is selected. Once clicked, the process evaluates all available metrics on your nodes and returns a filtered version of the Netdata dashboard. You will now see only the metrics that changed the most between the baseline window and the highlighted window you selected.

## Metric Correlations Options

These charts are fully interactive and, whenever possible, show only the **dimensions** related to the timeframe you selected.

If you find something else interesting in the results, you can select another window and press **Find Correlations** to start the process again.

## Metric Correlations options

MC exposes a few input parameters that you can adjust to iteratively explore your data in different ways. As is usually the case in Machine Learning (ML), there is no "one size fits all" algorithm. The approach that works best typically depends on the type of data, which can differ greatly from one metric to the next. It can even depend on the nature of the event or incident you are exploring in Netdata.

When you first run MC, it uses the most sensible general defaults. You can then vary any of the options below to explore further.

Metric Correlations offers adjustable parameters for deeper data exploration. Since different data types and incidents require different approaches, these settings allow for flexible analysis.

### Method

There are two algorithms available that score metrics based on how much they’ve changed between the baseline and highlight windows.

Two algorithms are available for scoring metrics based on changes between the baseline and highlight windows:

- `KS2` - A statistical test ([Two-sample Kolmogorov-Smirnov](https://en.wikipedia.org/wiki/Kolmogorov%E2%80%93Smirnov_test#Two-sample_Kolmogorov%E2%80%93Smirnov_test)) that compares the distribution of the highlighted window to the baseline to quantify which metrics show the most evidence of a significant change. You can explore our implementation [here](https://github.com/netdata/netdata/blob/d917f9831c0a1638ef4a56580f321eb6c9a88037/database/metric_correlations.c#L212).
- `Volume` - A heuristic measure based on the percentage change in averages between the highlighted window and the baseline, with various edge cases sensibly controlled for. You can explore our implementation [here](https://github.com/netdata/netdata/blob/d917f9831c0a1638ef4a56580f321eb6c9a88037/database/metric_correlations.c#L516).
- **`KS2` (Kolmogorov-Smirnov Test)**: A statistical method comparing distributions between the highlighted and baseline windows to detect significant changes. [Implementation details](https://github.com/netdata/netdata/blob/d917f9831c0a1638ef4a56580f321eb6c9a88037/database/metric_correlations.c#L212).
- **`Volume`**: A heuristic approach based on percentage change in averages, designed to handle edge cases. [Implementation details](https://github.com/netdata/netdata/blob/d917f9831c0a1638ef4a56580f321eb6c9a88037/database/metric_correlations.c#L516).
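To make the difference between the two methods concrete, here is a simplified pure-Python sketch. It is not a port of the C implementation linked above. `ks2_statistic` computes only the two-sample KS D statistic (the largest gap between the two empirical CDFs) and skips the p-value. `volume_score` is a bare percentage change in means and omits most of the edge-case handling the real implementation performs. Both function names are hypothetical.

```python
from bisect import bisect_right
from statistics import fmean


def ks2_statistic(baseline: list[float], highlight: list[float]) -> float:
    """Two-sample Kolmogorov-Smirnov D statistic.

    D is the largest absolute gap between the empirical CDFs of the two
    samples: 0 means identical empirical distributions, 1 means no overlap.
    """
    a, b = sorted(baseline), sorted(highlight)
    d = 0.0
    # Both ECDFs are step functions, so the maximum gap occurs at an observed value.
    for x in a + b:
        fa = bisect_right(a, x) / len(a)
        fb = bisect_right(b, x) / len(b)
        d = max(d, abs(fa - fb))
    return d


def volume_score(baseline: list[float], highlight: list[float]) -> float:
    """Absolute percentage change of the mean from baseline to highlight.

    Simplification: a zero baseline mean maps to 0.0 (no change) or infinity,
    instead of the more careful edge-case handling described above.
    """
    base_mean, high_mean = fmean(baseline), fmean(highlight)
    if base_mean == 0:
        return 0.0 if high_mean == 0 else float("inf")
    return abs(high_mean - base_mean) / abs(base_mean) * 100


baseline = [10.0, 11.0, 9.0, 10.0, 10.0, 11.0, 9.0, 10.0]
highlight = [20.0, 21.0, 19.0, 20.0]
print(ks2_statistic(baseline, highlight))  # 1.0: the samples do not overlap
print(volume_score(baseline, highlight))   # 100.0: the mean doubled
```

For both methods, a higher score means more change, which is what lets metrics be ranked. The scales differ, though: D lies between 0 and 1, while the percentage change is unbounded. Scores are therefore only comparable within one method.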

### Aggregation

Behind the scenes, Netdata aggregates the raw data as needed so that arbitrary window lengths can be selected for MC. By default, Netdata uses `Average` to aggregate raw data as part of pre-processing. However, other aggregations such as `Median`, `Min`, `Max`, and `Stddev` are also possible.

To accommodate different window lengths, Netdata aggregates raw data as needed. The default aggregation method is `Average`, but you can also choose `Median`, `Min`, `Max`, or `Stddev`.
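As a rough illustration of this pre-processing step, the sketch below groups consecutive raw samples into equal-sized buckets and reduces each bucket with one of the listed methods. The bucket-based approach and the `aggregate` helper are assumptions made for illustration, since Netdata's query engine handles grouping internally. Using the population standard deviation for `Stddev` is also an assumption.

```python
import statistics
from typing import Callable

# Map each aggregation option named above to a reducer function.
AGGREGATIONS: dict[str, Callable[[list[float]], float]] = {
    "Average": statistics.fmean,
    "Median": statistics.median,
    "Min": min,
    "Max": max,
    "Stddev": statistics.pstdev,  # population standard deviation (assumption)
}


def aggregate(samples: list[float], points: int, method: str = "Average") -> list[float]:
    """Reduce `samples` to at most `points` values by aggregating consecutive buckets."""
    if points <= 0:
        raise ValueError("points must be positive")
    reducer = AGGREGATIONS[method]
    bucket = max(1, -(-len(samples) // points))  # ceiling division
    return [reducer(samples[i:i + bucket]) for i in range(0, len(samples), bucket)]


raw = [1.0, 3.0, 2.0, 8.0, 5.0, 5.0]
print(aggregate(raw, 3))         # [2.0, 5.0, 5.0]
print(aggregate(raw, 3, "Max"))  # [3.0, 8.0, 5.0]
```

Different reducers emphasize different behavior. `Max` preserves short spikes that `Average` would dilute, which can change which metrics Metric Correlations ranks highest.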

### Data

### Data Type

Unlike other observability agents that only collect raw metrics, Netdata also assigns an [Anomaly Bit](https://github.com/netdata/netdata/tree/master/src/ml#anomaly-bit) in real time. This bit flags whether a metric is within normal ranges (0) or deviates significantly (1). This built-in anomaly detection allows you to analyze both the raw data and the anomaly rates.

Netdata assigns an [Anomaly Bit](https://github.com/netdata/netdata/tree/master/src/ml#anomaly-bit) to each metric in real time, flagging whether it deviates significantly from normal behavior. You can analyze either raw data or anomaly rates:

**Note**: Read the [ML documentation](/src/ml/README.md) to learn more about the native anomaly detection features within Netdata.

- `Metrics` - Run MC on the raw metric values.
- `Anomaly Rate` - Run MC on the corresponding anomaly rate for each metric.
- **`Metrics`**: Runs Metric Correlations on raw metric values.
- **`Anomaly Rate`**: Runs Metric Correlations on anomaly rates for each metric.
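The relationship between the per-sample anomaly bit and the anomaly rate can be sketched as the percentage of samples in a window whose bit is set. This is a simplified model: it treats a set bit as 1, as in the paragraph above, and the function name `anomaly_rate` is hypothetical.

```python
def anomaly_rate(anomaly_bits: list[int]) -> float:
    """Percentage of samples in a window flagged as anomalous (bit == 1)."""
    if not anomaly_bits:
        return 0.0
    return 100.0 * sum(anomaly_bits) / len(anomaly_bits)


# 3 anomalous samples out of 12 in the window.
window = [0, 0, 1, 1, 0, 0, 0, 1, 0, 0, 0, 0]
print(anomaly_rate(window))  # 25.0
```

With the `Anomaly Rate` data type, the scoring methods compare series like this between the baseline and the highlight, instead of comparing the raw values.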

## Metric Correlations on the Agent

Metric Correlations (MC) requests to Netdata Cloud are handled in two ways:

1. If MC is enabled on any node, the request routes to the highest-level node in the hierarchy (either a Parent node or the node itself).
2. If MC is not enabled on any node, Netdata Cloud processes the request by collecting data from nodes and computing correlations in its backend.
1. If MC is enabled on any node, the request is routed to the highest-level node (a Parent node or the node itself).
2. If MC is not enabled on any node, Netdata Cloud processes the request by collecting data from nodes and computing correlations on its backend.

|

||||

|

||||

## Usage tips

|

||||

## Usage Tips

|

||||

|

||||

- When running Metric Correlations from the [Metrics tab](/docs/dashboards-and-charts/metrics-tab-and-single-node-tabs.md) across multiple nodes, you might find better results if you iterate on the initial results by grouping by node to then filter to nodes of interest and rerun the Metric Correlations. So a typical workflow in this case would be to:

|

||||

- If unsure which nodes you’re interested in, then run MC on all nodes.

|

||||

- Within the initial results returned group the most interesting chart by node to see if the changes are across all nodes or a subset of nodes.

|

||||

- If you see a subset of nodes clearly jump out when you group by node, then filter for just those nodes of interest and run the MC again. This will result in less aggregation needing to be done by Netdata and so should help give clearer results as you interact with the slider.

|

||||

- Use the `Volume` algorithm for metrics with a lot of gaps (e.g., request latency when there are few requests), otherwise stick with `KS2`

|

||||

- By default, Netdata uses the `KS2` algorithm which is a tried and tested method for change detection in a lot of domains. The [Wikipedia](https://en.wikipedia.org/wiki/Kolmogorov%E2%80%93Smirnov_test) article gives a good overview of how this works. Basically, it is comparing, for each metric, its cumulative distribution in the highlight window with its cumulative distribution in the baseline window. The statistical test then seeks to quantify the extent to which we can say these two distributions look similar enough to be considered the same or not. The `Volume` algorithm is a bit more simple than `KS2` in that it basically compares (with some edge cases sensibly handled) the average value of the metric across baseline and highlight and looks at the percentage change. Often both `KS2` and `Volume` will have significant agreement and return similar metrics.

|

||||

- `Volume` might favor picking up more sparse metrics that were relatively flat and then came to life with some spikes (or vice versa). This is because for such metrics that just don't have that many different values in them, it is impossible to construct a cumulative distribution that can then be compared. So `Volume` might be useful in spotting examples of metrics turning on or off.

|

||||

- `KS2` since it relies on the full distribution might be better at highlighting more complex changes that `Volume` is unable to capture. For example, a change in the variation of a metric might be picked up easily by `KS2` but missed (or just much lower scored) by `Volume` since the averages might remain not all that different between baseline and highlight even if their variance has changed a lot.

|

||||

- Use `Volume` and `Anomaly Rate` together to ask what metrics have turned most anomalous from baseline to a highlighted window. You can expand the embedded anomaly rate chart once you have results to see this more clearly.

|

||||

- When running Metric Correlations from the [Metrics tab](/docs/dashboards-and-charts/metrics-tab-and-single-node-tabs.md) across multiple nodes, refine your results by grouping by node:

|

||||

1. Run MC on all nodes if you're unsure which ones are relevant.

|

||||

2. Group the most interesting charts by node to determine whether changes affect all nodes or just a subset.

|

||||

3. If a subset of nodes stands out, filter for those nodes and rerun MC to get more precise results.

|

||||

|

||||

- Choose the **`Volume`** algorithm for sparse metrics (e.g., request latency with few requests). Otherwise, use **`KS2`**.

|

||||

- **`KS2`** is ideal for detecting complex distribution changes, such as shifts in variance.

|

||||

- **`Volume`** is better for detecting metrics that were inactive and then spiked (or vice versa).

|

||||

|

||||

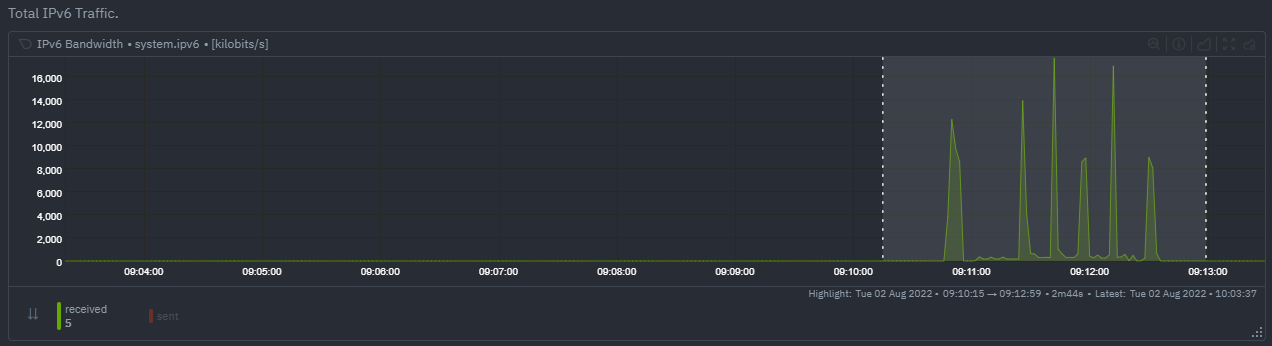

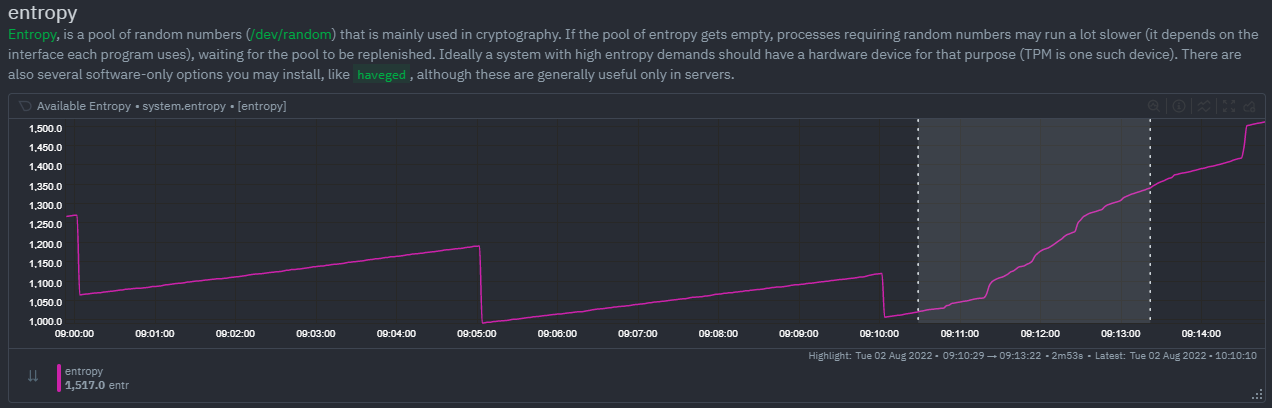

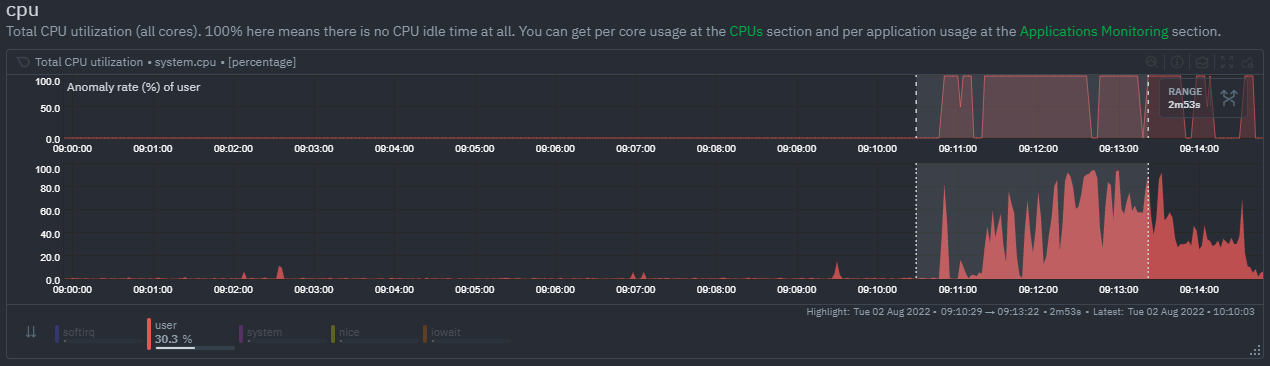

**Example:**

|

||||

- `Volume` can highlight network traffic suddenly turning on.

|

||||

- `KS2` can detect entropy distribution changes missed by `Volume`.

|

||||

|

||||

- Combine **`Volume`** and **`Anomaly Rate`** to identify the most anomalous metrics within a timeframe. Expand the anomaly rate chart to visualize results more clearly.
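The tips about variance changes and metrics turning on or off can be checked with a small experiment on synthetic data. The sketch below uses simplified versions of both scores, which are assumptions rather than Netdata's implementation: the KS D statistic without a p-value, and a bare percentage change of means with crude zero handling. It scores two cases: a metric whose spread grows while its mean stays the same, and a nearly idle metric that starts producing spikes.

```python
from bisect import bisect_right
from statistics import fmean


def ks2(a: list[float], b: list[float]) -> float:
    """Two-sample KS D statistic: largest gap between the empirical CDFs."""
    a, b = sorted(a), sorted(b)
    return max(abs(bisect_right(a, x) / len(a) - bisect_right(b, x) / len(b)) for x in a + b)


def volume(a: list[float], b: list[float]) -> float:
    """Percentage change of the mean; a zero baseline mean is handled crudely."""
    mean_a, mean_b = fmean(a), fmean(b)
    if mean_a == 0:
        return 0.0 if mean_b == 0 else float("inf")
    return abs(mean_b - mean_a) / abs(mean_a) * 100


# Variance change: the mean stays at 50, but the spread grows a lot.
calm = [49.0, 50.0, 51.0, 50.0] * 4
noisy = [30.0, 70.0, 40.0, 60.0]
print(volume(calm, noisy))  # 0.0: Volume sees no change
print(ks2(calm, noisy))     # 0.5: KS2 picks up the wider distribution

# Turning on: a nearly idle metric that starts producing spikes.
idle = [0.0] * 7 + [1.0]
active = [0.0, 40.0, 0.0, 35.0]
print(volume(idle, active))  # 14900.0: a huge relative jump in the average
print(ks2(idle, active))     # 0.5
```

Within `Volume`, the metric that turns on ranks far above the variance change, which `Volume` misses entirely. `KS2` scores both cases equally. This matches the guidance above.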

@@ -1,20 +1,20 @@

# Alert troubleshooting with Netdata Assistant

# Alert Troubleshooting with Netdata Assistant

The Netdata Assistant uses large language models and the Netdata community's collective knowledge to guide you during troubleshooting. It is designed to make understanding and root-causing alerts simpler and faster.

The Netdata Assistant leverages large language models and community knowledge to simplify alert troubleshooting and root cause analysis.

## Using Netdata Assistant

- Navigate to the Alerts tab.
- If there are active alerts, the `Actions` column will have an Assistant button.
1. Navigate to the **Alerts** tab.
2. If there are active alerts, the **Actions** column will have an **Assistant** button.

- Clicking the Assistant button opens a floating window with customized information and troubleshooting tips for this alert. The window can follow you through your troubleshooting journey on Netdata dashboards.

3. Click the **Assistant** button to open a floating window with tailored troubleshooting insights.

- If you need more information or want to dig deeper into an issue, Netdata Assistant also provides links to helpful web resources.

4. If you need more details, the Assistant provides useful resource links to help with further investigation.

- If there are no active alerts, you can still use Netdata Assistant by clicking the Assistant button on the Alert Configuration view.

5. If there are no active alerts, you can still access the Assistant from the **Alert Configuration** view.

@@ -1,64 +1,61 @@

# Netdata Windows Installer

# Windows Installer Guide

Netdata offers a convenient Windows installer for easy setup. This executable provides two distinct installation modes, outlined below.

Netdata provides a straightforward Windows installer for easy setup. The installer offers two installation modes, each with specific features outlined below.

The Netdata Windows Agent is designed for users with paid Netdata subscriptions. If you are using a free (non-paid) Netdata account, or no Netdata account at all, the Windows Agent will have restricted functionality. Specifically:

**Important Note**: The Netdata Windows Agent is intended for users with paid Netdata subscriptions. If you're using a free account or no account at all, certain features of the Windows Agent will be restricted.

- **Standalone Agents**: The user interface within the Windows Agent will be locked, preventing access to monitoring data.
- [**Child Agents**](/docs/observability-centralization-points/metrics-centralization-points/README.md): Even when a Windows Agent streams data to a Linux parent Netdata instance, the Windows Agent's monitoring data will be locked and inaccessible within the parent dashboard's user interface.

**Key Limitations for Free Users**:

- **Standalone Agents**: The user interface will be locked, and you will not have access to monitoring data.
- **Child Agents**: If the Windows Agent streams data to a Linux-based parent Netdata instance, you will be unable to view the Windows Agent’s monitoring data in the parent dashboard.

## Download the MSI Installer

You can download the Netdata Windows installer (MSI) from the official releases page:

You can download the Netdata Windows installer (MSI) from the official releases page. Choose between the following versions:

| Version | Description |
|--------------------------------------------------------------------------------------------------|---------------------------------------------------------------------------------------------------------------------------------------------------------------------------|
| [Stable](https://github.com/netdata/netdata/releases/latest/download/netdata-x64.msi) | This is the recommended version for most users as it provides the most reliable and well-tested features. |
| [Nightly](https://github.com/netdata/netdata-nightlies/releases/latest/download/netdata-x64.msi) | Offers the latest features but may contain bugs or instabilities. Use this option if you require access to the newest features and are comfortable with potential issues. |

| Version | Description |
|--------------------------------------------------------------------------------------------------|---------------------------------------------------------------------------------------------------------------------------------------------|
| [Stable](https://github.com/netdata/netdata/releases/latest/download/netdata-x64.msi) | Recommended for most users; offers the most reliable and well-tested features. |
| [Nightly](https://github.com/netdata/netdata-nightlies/releases/latest/download/netdata-x64.msi) | Contains the latest features but may have bugs or instability. Choose this if you need the newest features and can handle potential issues. |

## Silent Mode (Command line)

## Silent Installation (Command Line)

This section provides instructions for installing Netdata in silent mode, which is ideal for automated deployments.

Silent mode allows for automated deployments without user interaction.

> **Info**
>
> Run the installer as an administrator to avoid the Windows prompt.
>
> Using silent mode implicitly accepts the terms of the [GPL-3](https://raw.githubusercontent.com/netdata/netdata/refs/heads/master/LICENSE) (Netdata Agent) and [NCUL1](https://app.netdata.cloud/LICENSE.txt) (Netdata Web Interface) licenses, skipping the display of agreements.

> **Note**: Run the installer as an administrator to avoid prompts.

### Available Options

By using silent mode, you implicitly agree to the terms of the [GPL-3](https://raw.githubusercontent.com/netdata/netdata/refs/heads/master/LICENSE) (Netdata Agent) and [NCUL1](https://app.netdata.cloud/LICENSE.txt) (Netdata Web Interface) licenses, and the agreements will not be displayed during installation.

| Option | Description |
|--------------|--------------------------------------------------------------------------------------------------|
| `/qn` | Enables silent mode installation. |
| `/i` | Specifies the path to the MSI installer file. |
| `INSECURE=1` | Forces insecure connections, bypassing hostname verification (use only if absolutely necessary). |
| `TOKEN=` | Sets the Claim Token for your Netdata Cloud Space. |
| `ROOMS=` | Comma-separated list of Room IDs where you want your node to appear. |
| `PROXY=` | Sets the proxy server address if your network requires one. |

### Installation Options

| Option | Description |
|--------------|----------------------------------------------------------------------------------------|
| `/qn` | Enables silent mode installation. |
| `/i` | Specifies the path to the MSI installer file. |
| `INSECURE=1` | Forces insecure connections, bypassing hostname verification. Use only when necessary. |
| `TOKEN=` | Sets the Claim Token for your Netdata Cloud Space. |
| `ROOMS=` | Comma-separated list of Room IDs where your node will appear. |
| `PROXY=` | Specifies the proxy server address for networks requiring one. |

### Example Usage

To connect your Agent to your Cloud Space:

To connect your Agent to your Cloud Space, use the following command:

```powershell
msiexec /qn /i netdata-x64.msi TOKEN="<YOUR_TOKEN>" ROOMS="<YOUR_ROOMS>"
```

Where:

Replace `<YOUR_TOKEN>` with your Netdata Cloud Space claim token and `<YOUR_ROOMS>` with your Room ID(s).

- `<YOUR_TOKEN>`: Your Space claim token from Netdata Cloud.
- `<YOUR_ROOMS>`: Your Room ID(s) from Netdata Cloud.

This command downloads and installs Netdata in one step:

You can also download and install Netdata in one step with the following command:

```powershell
$ProgressPreference = 'SilentlyContinue'; Invoke-WebRequest https://github.com/netdata/netdata/releases/latest/download/netdata-x64.msi -OutFile "netdata-x64.msi"; msiexec /qn /i netdata-x64.msi TOKEN="<YOUR_TOKEN>" ROOMS="<YOUR_ROOMS>"
```

## Graphical User Interface (GUI)

## Graphical User Interface (GUI) Installation

1. **Double-click** the installer to begin the setup process.
2. **Grant Administrator Privileges**: You'll need to provide administrator permissions to install the Netdata service.
1. **Double-click** the MSI installer to begin the installation process.
2. **Grant Administrator Privileges**: You will be prompted to provide administrator permissions to install the Netdata service.

Once installed, you can access your Netdata dashboard at `http://localhost:19999`.

After installation, you can access your Netdata dashboard by opening your browser and going to `http://localhost:19999`.

|

||||

|

|

|

|||

123

src/ml/README.md

123

src/ml/README.md

|

|

@ -1,84 +1,64 @@

|

|||

# ML models and anomaly detection

|

||||

# Machine Learning Models and Anomaly Detection in Netdata

|

||||

|

||||

In observability, machine learning can be used to detect patterns and anomalies in large datasets, enabling users to identify potential issues before they become critical.

|

||||

## Overview

|

||||

|

||||

At Netdata through understanding what useful insights ML can provide, we created a tool that can improve troubleshooting, reduce mean time to resolution and in many cases prevent issues from escalating. That tool is called the [Anomaly Advisor](/docs/dashboards-and-charts/anomaly-advisor-tab.md), available at our [Netdata dashboard](/docs/dashboards-and-charts/README.md).

|

||||

Machine learning helps detect patterns and anomalies in large datasets, enabling early issue identification before they escalate.

|

||||

|

||||

At Netdata, we developed **Anomaly Advisor**, a tool designed to improve troubleshooting, reduce mean time to resolution, and prevent issues from escalating. You can access it through the [Netdata dashboard](/docs/dashboards-and-charts/README.md).

|

||||

|

||||

> **Note**

|

||||

>

|

||||

> If you want to learn how to configure ML on your nodes, check the [ML configuration documentation](/src/ml/ml-configuration.md).

|

||||

> To configure ML on your nodes, check the [ML configuration documentation](/src/ml/ml-configuration.md).

|

||||

|

||||

## Design principles

|

||||

---

|

||||

|

||||

The following are the high level design principles of Machine Learning in Netdata:

|

||||

## Design Principles

|

||||

|

||||

1. **Unsupervised**

|

||||

Netdata’s machine learning models follow these key principles:

|

||||

|

||||

Whatever the ML models can do, they should do it by themselves, without any help or assistance from users.

|

||||

| Principle | Description |

|

||||

|----------------------------|------------------------------------------------------------------------------------------------------------------------------------------|

|

||||

| **Unsupervised Learning** | Models operate independently without requiring user input. |

|

||||

| **Real-time Performance** | While ML impacts CPU usage, it won't compromise Netdata's high-fidelity, real-time monitoring. |

|

||||

| **Seamless Integration** | ML-based insights are fully embedded into Netdata's existing infrastructure monitoring and troubleshooting. |

|

||||

| **Assistance Over Alerts** | ML helps users investigate potential issues rather than triggering unnecessary alerts. It won't wake you up at 3 AM for minor anomalies. |

|

||||

|

||||

2. **Real-time**

|

||||

---

|

||||

|

||||

We understand that Machine Learning will have some impact on resource utilization, especially in CPU utilization, but it shouldn't prevent Netdata from being real-time and high-fidelity.

|

||||

## Types of Anomalies Detected

|

||||

|

||||

3. **Integrated**

|

||||

Netdata identifies several anomaly types:

|

||||

|

||||

Everything achieved with Machine Learning should be tightly integrated to the infrastructure exploration and troubleshooting practices we are used to.

|

||||

- **Point Anomalies**: Unusually high or low values compared to historical data.

|

||||

- **Contextual Anomalies**: Sequences of values that deviate from expected patterns.

|

||||

- **Collective Anomalies**: Multivariate anomalies where a combination of metrics appears off.

|

||||

- **Concept Drifts**: Gradual shifts leading to a new baseline.

|

||||

- **Change Points**: Sudden shifts resulting in a new normal state.

|

||||

|

||||

4. **Assist, Advice, Consult**

|

||||

---

|

||||

|

||||

If we can't be sure that a decision made by Machine Learning is 100% accurate, we should use this to assist and consult users in their journey.

|

||||

## How Netdata’s ML Models Work

|

||||

|

||||

In other words, we don't want to wake up someone at 3 AM, just because a model detected something.

|

||||

### Training & Detection

|

||||

|

||||

Some of the types of anomalies Netdata detects are:

|

||||

Once ML is enabled, Netdata trains an unsupervised model for each metric. By default, this model is a [k-means clustering](https://en.wikipedia.org/wiki/K-means_clustering) algorithm trained on the last 4 hours of data. Instead of just analyzing raw values, the model works with preprocessed feature vectors to improve detection accuracy.

|

||||

|

||||

1. **Point Anomalies** or **Strange Points**: Single points that represent very big or very small values, not seen before (in some statistical sense).

|

||||

2. **Contextual Anomalies** or **Strange Patterns**: Not strange points in their own, but unexpected sequences of points, given the history of the time-series.

|

||||

3. **Collective Anomalies** or **Strange Multivariate Patterns**: Neither strange points nor strange patterns, but in global sense something looks off.

|

||||

4. **Concept Drifts** or **Strange Trends**: A slow and steady drift to a new state.

|

||||

5. **Change Point Detection** or **Strange Step**: A shift occurred and gradually a new normal is established.

|

||||

|

||||

### Models

|

||||

|

||||

Once ML is enabled, Netdata will begin training a model for each dimension. By default this model is a [k-means clustering](https://en.wikipedia.org/wiki/K-means_clustering) model trained on the most recent 4 hours of data.

Rather than just using the most recent value of each raw metric, the model works on a preprocessed [feature vector](https://en.wikipedia.org/wiki/Feature_(machine_learning)#:~:text=edges%20and%20objects.-,Feature%20vectors,-%5Bedit%5D) of recent smoothed values.

This enables the model to detect a wider range of potentially anomalous patterns in recent observations as opposed to just point-anomalies like big spikes or drops.
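The preprocessing can be sketched in a few lines of Python. This is a minimal illustration, not Netdata's C implementation. It assumes the default settings described in the ML configuration parameters (`num samples to diff = 1`, `num samples to smooth = 3`, `num samples to lag = 5`). The helper name `make_feature_vectors` is hypothetical, and the real ordering and edge handling may differ.

```python
def make_feature_vectors(values, diff_n=1, smooth_n=3, lag_n=5):
    """Turn a raw series into feature vectors (hypothetical helper).

    diff_n:   1 to use first differences, 0 to use raw values
    smooth_n: size of the trailing rolling mean (0 or 1 disables smoothing)
    lag_n:    number of previous values kept next to the current one
    """
    xs = list(values)

    # 1. Differencing: removes the level/trend of the series.
    if diff_n:
        xs = [cur - prev for prev, cur in zip(xs, xs[1:])]

    # 2. Smoothing: trailing rolling mean over the last smooth_n values.
    if smooth_n > 1:
        xs = [sum(xs[i - smooth_n + 1:i + 1]) / smooth_n
              for i in range(smooth_n - 1, len(xs))]

    # 3. Lagging: current value first, followed by the lag_n older values.
    return [xs[i - lag_n:i + 1][::-1] for i in range(lag_n, len(xs))]


# A growing series whose differences are 1, 2, 3, ... 8.
vectors = make_feature_vectors([0, 1, 3, 6, 10, 15, 21, 28, 36])
print(vectors)  # [[7.0, 6.0, 5.0, 4.0, 3.0, 2.0]]
```

Each vector carries `lag_n + 1` recent values. Because of this, a run of individually ordinary values can still form an unusual vector, which is what lets the model see patterns rather than only spikes.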

Unsupervised models produce some noise in the form of random false positives. To remove this noise, Netdata trains multiple machine learning models for each time-series, covering more than the last 2 days in total.

Netdata uses all of its available ML models to detect anomalies: every machine learning model of a time-series must agree that a collected sample is an outlier for it to be marked as an anomaly.

This process removes 99% of the false positives, offering reliable unsupervised anomaly detection.

The sections below will introduce you to the main concepts.

To reduce false positives, Netdata trains multiple models per time-series, covering over two days of data. An anomaly is flagged only if **all** models agree on it, eliminating 99% of false positives.
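The consensus rule can be expressed in a few lines. This sketch assumes each model has already given a yes/no outlier verdict for a sample. `anomaly_bit` and `anomaly_bits_over_time` are hypothetical names. The 100/0 encoding follows the anomaly bit convention used in this document.

```python
def anomaly_bit(model_verdicts):
    """Return 100 if every model flags the sample as an outlier, else 0."""
    verdicts = list(model_verdicts)
    # Assumption: with no trained models there is no evidence, so report normal.
    if not verdicts:
        return 0
    return 100 if all(verdicts) else 0


def anomaly_bits_over_time(verdicts_per_model):
    """verdicts_per_model[m][t] is model m's verdict at time step t."""
    return [anomaly_bit(step) for step in zip(*verdicts_per_model)]


# Three models, five time steps. Each model has one spurious flag of its own,
# but only the last time step is flagged by all of them.
model_a = [True, False, False, False, True]
model_b = [False, True, False, False, True]
model_c = [False, False, True, False, True]
print(anomaly_bits_over_time([model_a, model_b, model_c]))  # [0, 0, 0, 0, 100]
```

Requiring unanimity trades some sensitivity for far fewer false positives. An isolated false alarm from one model is discarded unless every other model agrees.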

### Anomaly Bit

Once each model is trained, Netdata will begin producing an **anomaly score** at each time step for each dimension. It **represents a distance measure** to the centers of the model's trained clusters (by default each model has k=2, so two clusters exist for every model).

Each trained model assigns an **anomaly score** at every time step based on how far the data deviates from learned clusters. If the score exceeds the 99th percentile of the scores seen in the training data, the **anomaly bit** is set to `true` (100); otherwise, it remains `false` (0).

Anomalous data should have a bigger distance from the cluster centers than normal data points. If the anomaly score is sufficiently large, it is a sign that the recent raw values of the dimension could potentially be anomalous.

**Key benefits:**

By default, a data point is considered anomalous if its distance from the cluster centers is greater than the 99th-percentile distance of the data used in training.

Once this threshold is passed, the anomaly bit corresponding to that dimension is set to `true` to flag it as anomalous, otherwise it would be left as `false` to signal normal data.
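The scoring and thresholding steps can be illustrated with a small pure-Python k-means (k=2). This is a sketch under stated assumptions, not Netdata's implementation:

- The score is taken to be the Euclidean distance to the nearest cluster center.
- The threshold is the nearest-rank 99th percentile of the training scores.
- Netdata may normalize its score differently.
- All function names are hypothetical.
- Two-dimensional vectors are used instead of full feature vectors, to keep the example readable.

```python
import math
import random


def dist(a, b):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def train_kmeans(vectors, k=2, iterations=20, seed=0):
    """Plain Lloyd's k-means; returns the k cluster centers."""
    rng = random.Random(seed)
    centers = [list(v) for v in rng.sample(vectors, k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for v in vectors:
            nearest = min(range(k), key=lambda c: dist(v, centers[c]))
            clusters[nearest].append(v)
        for c, members in enumerate(clusters):
            if members:  # keep the previous center if a cluster is empty
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return centers


def score(v, centers):
    """Anomaly score (assumption): distance to the nearest cluster center."""
    return min(dist(v, c) for c in centers)


def percentile(values, q):
    """Nearest-rank percentile for q in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q / 100 * len(ordered)))
    return ordered[rank - 1]


def train_model(training_vectors, k=2, q=99):
    """Fit k-means and record the q-th percentile of the training scores."""
    centers = train_kmeans(training_vectors, k)
    threshold = percentile([score(v, centers) for v in training_vectors], q)
    return centers, threshold


def anomaly_bit_from_model(v, model):
    """100 if v scores above the model's training threshold, else 0."""
    centers, threshold = model
    return 100 if score(v, centers) > threshold else 0


# Two well-separated "normal" regimes around (0, 0) and (10, 10).
rng = random.Random(42)
training = [[rng.gauss(cx, 0.5), rng.gauss(cy, 0.5)]
            for cx, cy in [(0, 0), (10, 10)] for _ in range(100)]
model = train_model(training)

print(anomaly_bit_from_model([0.1, -0.1], model))  # 0: close to a learned cluster
print(anomaly_bit_from_model([5, 5], model))       # 100: far from both clusters
```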

#### How the anomaly bit is used

In addition to the raw value of each metric, Netdata also stores the anomaly bit **that is either 100 (anomalous) or 0 (normal)**.

More importantly, this is achieved without additional storage overhead: the bit is embedded into the custom floating point number format the Netdata database uses, so it does not increase the memory or disk footprint.

The query engine of Netdata uses this bit to compute anomaly rates while it executes normal time-series queries. This eliminates the need for additional queries for anomaly rates, as all `/api/v2` time-series queries include anomaly rate information.

- No additional storage overhead since the anomaly bit is embedded in Netdata’s floating point number format.
- The query engine automatically computes anomaly rates without requiring extra queries.

### Anomaly Rate

Once all models have been trained, we can think of the Netdata dashboard as a big matrix/table of 0 and 100 values. Using this anomaly-bit representation of the node's state, we can now detect overall node-level anomalies.

Netdata calculates **Node Anomaly Rate (NAR)** and **Dimension Anomaly Rate (DAR)** based on anomaly bits. Here’s an example matrix:

This figure illustrates the main idea (the x axis represents dimensions and the y axis time):

| Time    | d1      | d2      | d3      | d4      | d5      | **NAR**               |
|---------|---------|---------|---------|---------|---------|-----------------------|
| t1      | 0       | 0       | 0       | 0       | 0       | **0%**                |
| t2      | 0       | 0       | 0       | 0       | 100     | **20%**               |

@ -92,30 +72,33 @@ This figure illustrates the main idea (the x axis represents dimensions and the

| t10     | 0       | 0       | 0       | 0       | 0       | **0%**                |
| **DAR** | **10%** | **30%** | **20%** | **20%** | **30%** | **_NAR_t1-10 = 22%_** |

- DAR = Dimension Anomaly Rate
- NAR = Node Anomaly Rate
- NAR_t1-t10 = Node Anomaly Rate over t1 to t10
- **DAR (Dimension Anomaly Rate):** Percentage of time steps in which a specific metric was anomalous.
- **NAR (Node Anomaly Rate):** Percentage of metrics that are anomalous at a given time.
- **Overall anomaly rate:** Computed across the entire dataset for deeper insights.

To calculate an anomaly rate, we can take the average along either a row or a column.

### Node-Level Anomaly Detection

For example, averaging along one row gives the Node Anomaly Rate (NAR) across all dimensions at time `t`.

Netdata tracks the percentage of anomaly bits over time. When the **Node Anomaly Rate (NAR)** exceeds a set threshold and remains high for a period, a **node anomaly event** is triggered. These events are recorded in the `new_anomaly_event` dimension on the `anomaly_detection.anomaly_detection` chart.

Likewise if we averaged a column then we would have the dimension anomaly rate for each dimension over the time window `t = 1-10`. Extending this idea, we can work out an overall anomaly rate for the whole matrix or any subset of it we might be interested in.
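Because each anomaly bit is either 0 or 100, averaging bits directly yields a percentage. The sketch below computes the row, column, and overall averages with hypothetical helper names. It uses a small made-up matrix rather than the table above, since that table's middle rows are elided in this diff.

```python
def node_anomaly_rates(matrix):
    """NAR per time step: mean of each row (rows = time steps, values 0 or 100)."""
    return [sum(row) / len(row) for row in matrix]


def dimension_anomaly_rates(matrix):
    """DAR per dimension: mean of each column."""
    return [sum(col) / len(col) for col in zip(*matrix)]


def overall_anomaly_rate(matrix):
    """Mean over every cell of the matrix (or of any sub-matrix passed in)."""
    cells = [bit for row in matrix for bit in row]
    return sum(cells) / len(cells)


# Hypothetical 4 time steps x 5 dimensions.
bits = [
    [0,   0, 0,   0,   0],
    [0,   0, 0,   0, 100],
    [100, 0, 0, 100, 100],
    [0,   0, 0,   0,   0],
]
print(node_anomaly_rates(bits))       # [0.0, 20.0, 60.0, 0.0]
print(dimension_anomaly_rates(bits))  # [25.0, 0.0, 0.0, 25.0, 50.0]
print(overall_anomaly_rate(bits))     # 20.0
```

When every row has the same length, the overall rate equals both the mean of the NAR values and the mean of the DAR values.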

---

### Anomaly detector, node-level anomaly events

## Viewing Anomaly Data in Netdata

An anomaly detector looks at all the anomaly bits of a node. Netdata's anomaly detector produces an anomaly event when the percentage of anomaly bits is high enough for a persistent amount of time.

Once ML is enabled, Netdata provides an **Anomaly Detection** menu with key charts:

This anomaly event signals that there was sufficient evidence among all the anomaly bits that some strange behavior might have been detected in a more global sense across the node.

- **`anomaly_detection.dimensions`**: Number of dimensions flagged as anomalous.
- **`anomaly_detection.anomaly_rate`**: Percentage of anomalous dimensions.
- **`anomaly_detection.anomaly_detection`**: Flags (0 or 1) indicating when an anomaly event occurs.

Essentially, if the Node Anomaly Rate (NAR) passes a defined threshold and stays above that threshold for a persistent amount of time, a node anomaly event will be triggered.

These insights help you quickly assess potential issues and take action before they escalate.

These anomaly events are currently exposed via the `new_anomaly_event` dimension on the `anomaly_detection.anomaly_detection` chart.
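The "above a threshold for a persistent amount of time" idea can be sketched as a rolling average over per-step NAR values. This is an illustrative assumption, not Netdata's exact detector:

- The grouping method is taken to be a plain average.
- `threshold_pct` stands in for `host anomaly rate threshold`.
- `window` stands in for `anomaly detection grouping duration`. For example, 300 steps would be 5 minutes at 1 second per step.
- The function name is hypothetical.

```python
from collections import deque


def anomaly_event_flags(nar_per_step, threshold_pct=1.0, window=300):
    """Return 0/1 flags: 1 when the rolling average NAR exceeds threshold_pct.

    Assumptions: nar_per_step holds the Node Anomaly Rate (0-100) per step,
    the grouping is a plain average, and no flag is raised until a full
    window of samples is available.
    """
    recent = deque(maxlen=window)
    flags = []
    for nar in nar_per_step:
        recent.append(nar)
        is_full = len(recent) == window
        above = is_full and sum(recent) / window > threshold_pct
        flags.append(1 if above else 0)
    return flags


# A one-step blip is averaged away; a sustained rise triggers an event.
spike = [0.0] * 10 + [4.0] + [0.0] * 10
sustained = [0.0] * 10 + [3.0] * 10
print(sum(anomaly_event_flags(spike, window=5)))      # 0
print(sum(anomaly_event_flags(sustained, window=5)))  # 9
```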

---

## Charts

## Summary

Once enabled, the "Anomaly Detection" menu and charts will be available on the dashboard.

Netdata’s machine learning models provide reliable, real-time anomaly detection with minimal false positives. By embedding ML within existing observability workflows, Netdata enhances troubleshooting and ensures proactive monitoring without unnecessary alerts.

- `anomaly_detection.dimensions`: Total count of dimensions considered anomalous or normal.
- `anomaly_detection.anomaly_rate`: Percentage of anomalous dimensions.
- `anomaly_detection.anomaly_detection`: Flags (0 or 1) to show when an anomaly event has been triggered by the detector.

For more details, check out:

- [Anomaly Advisor](/docs/dashboards-and-charts/anomaly-advisor-tab.md)
- [ML Configuration Guide](/src/ml/ml-configuration.md)

@ -1,14 +1,21 @@

# ML Configuration

Netdata's [Machine Learning](/src/ml/README.md) capabilities are enabled by default if the [Database mode](/src/database/README.md) is set to `db = dbengine`.

## Enabling or Disabling Machine Learning

To enable or disable Machine Learning capabilities on a node:

1. [Edit `netdata.conf`](/docs/netdata-agent/configuration/README.md#edit-a-configuration-file-using-edit-config).
2. In the `[ml]` section:
   - Set `enabled` to `yes` to enable ML.
   - Set `enabled` to `no` to disable ML.
   - Leave it at the default `auto` to enable ML only when [Database mode](/src/database/README.md) is set to `dbengine`.
3. [Restart Netdata](/docs/netdata-agent/start-stop-restart.md).

## Available Configuration Parameters

Below is a list of all available configuration parameters and their default values:

```bash
[ml]

@ -36,70 +43,101 @@ Below is a list of all the available configuration params and their default valu

## Configuration Examples

If you want to run ML on a parent instead of at the edge, the examples below illustrate various configurations.

This example assumes three child nodes [streaming](/docs/observability-centralization-points/metrics-centralization-points/README.md) to one parent node. It shows different ways to configure ML:

- Running ML on the parent for some or all children.
- Running ML on the children themselves.
- A mixed approach.

```mermaid
flowchart BT
C1["Netdata Child 0\nML enabled"]
C2["Netdata Child 1\nML enabled"]
C3["Netdata Child 2\nML disabled"]
P1["Netdata Parent\n(ML enabled for itself and Child 1 & 2)"]
C1 --> P1
C2 --> P1
C3 --> P1
```

```text
# Parent will run ML for itself and Child 1 & 2, but skip Child 0.
# Child 0 and Child 1 will run ML independently.
# Child 1 will run its own ML at the edge even though the parent also runs ML for it. This is potentially wasteful, but possible (Netdata Cloud will essentially average any overlapping models).
# Child 2 will rely on the parent for ML and will not run it itself.

# Parent configuration (parent-ml-enabled)
# run ML on all hosts apart from child-0-ml-enabled
[ml]
enabled = yes
hosts to skip from training = child-0-ml-enabled

# Child 0 configuration (child-0-ml-enabled)
[ml]
enabled = yes

# Child 1 configuration (child-1-ml-enabled)
[ml]
enabled = yes

# Child 2 configuration (child-2-ml-disabled)
# do not run ML on child-2-ml-disabled
[ml]
enabled = no

```
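To check which node ends up training models for each host in the example above, the placement logic can be modeled in Python. This is a simplified sketch with these assumptions:

- `where_ml_runs` is a hypothetical helper.
- The skip setting is treated as a single glob matched only against child hostnames, whereas the real `hosts to skip from training` value accepts Netdata simple patterns.

```python
from fnmatch import fnmatchcase


def where_ml_runs(parent_name, parent_enabled, skip_pattern, children):
    """Map each host to the node(s) that train ML models for it.

    children maps a child hostname to its own `[ml] enabled` flag.
    Simplification: skip_pattern is one glob, applied to children only.
    """
    placement = {parent_name: [parent_name] if parent_enabled else []}
    for child, child_enabled in children.items():
        runs_on = []
        if child_enabled:
            runs_on.append(child)  # ML at the edge
        if parent_enabled and not fnmatchcase(child, skip_pattern):
            runs_on.append(parent_name)  # ML on the parent
        placement[child] = runs_on
    return placement


placement = where_ml_runs(
    "parent-ml-enabled", True, "child-0-ml-enabled",
    {"child-0-ml-enabled": True, "child-1-ml-enabled": True, "child-2-ml-disabled": False},
)
for host, nodes in placement.items():
    print(host, "->", nodes)
# parent-ml-enabled -> ['parent-ml-enabled']
# child-0-ml-enabled -> ['child-0-ml-enabled']
# child-1-ml-enabled -> ['child-1-ml-enabled', 'parent-ml-enabled']
# child-2-ml-disabled -> ['parent-ml-enabled']
```

The output mirrors the comments in the configuration: Child 1 is the only host trained in two places.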

## Parameter Descriptions (Min/Max Values)

- `enabled`: `yes` to enable, `no` to disable, or `auto` to let Netdata decide when to enable ML.
- `maximum num samples to train`: (`3600`/`86400`) This is the maximum number of samples each model is trained on. For example, the default of `21600` trains on the preceding 6 hours of data, assuming an `update every` of 1 second.
- `minimum num samples to train`: (`900`/`21600`) This is the minimum amount of data required to train a model. For example, the default of `900` means that a model is trained once at least 15 minutes of data is available; otherwise, training is skipped and checked again at the next training run.
- `train every`: (`3h`/`6h`) This is how often each model is retrained. For example, the default of `3h` means that each model is retrained every 3 hours. Note: the training of all models is spread out across the `train every` period for efficiency, so each model is trained in a staggered manner within each `train every` period.
- `number of models per dimension`: (`1`/`168`) This is the number of trained models used for scoring. For example, the default `number of models per dimension = 18` means that the 18 most recently trained models for the dimension are used to determine the corresponding anomaly bit. Under the default settings of `maximum num samples to train = 21600`, `train every = 3h` and `number of models per dimension = 18`, Netdata stores and uses the last 18 trained models for each dimension when determining the anomaly bit. For the latest feature vector to be considered anomalous in this configuration, it must look anomalous across _all_ the models trained for that dimension in the last 18*(10800/3600) ~= 54 hours. As such, increasing `number of models per dimension` may reduce some false positives, since more models (covering a wider time frame of training) are used during scoring.
- `dbengine anomaly rate every`: (`30`/`900`) This is how often Netdata aggregates all the anomaly bits into a single chart (`anomaly_detection.anomaly_rates`). Aggregating into a single chart enables anomaly rate ranking over _all_ metrics with one API call, as opposed to one call per chart.
- `num samples to diff`: (`0`/`1`) This is a `0` or `1` that determines whether the model operates on differences of the raw data or on the raw data itself. For example, the default of `1` means that we take differences of the raw values. Using differences is more general and works on dimensions that naturally have trends or cycles as part of their normal behavior, to which we don't want to be too sensitive.
- `num samples to smooth`: (`0`/`5`) This is a small integer that controls the amount of smoothing applied as part of the feature processing used by the model. For example, the default of `3` means that the rolling average of the last 3 values is used. Smoothing like this helps the model be a little more robust to spiky types of dimensions that naturally "jump" up or down as part of their normal behavior.
- `num samples to lag`: (`0`/`5`) This is a small integer that determines how many lagged values of the dimension to include in the feature vector. For example, the default of `5` means that in addition to the most recent (by default, differenced and smoothed) value of the dimension, the feature vector will also include the 5 previous values. Using lagged values in the feature representation allows the model to detect strange patterns over recent values of a dimension, as opposed to just checking whether the most recent value itself is big or small enough to be anomalous.
- `random sampling ratio`: (`0.2`/`1.0`) This parameter determines how much of the available training data is randomly sampled when training a model. The default of `0.2` means that Netdata will train on a random 20% of training data. This parameter influences cost efficiency. At `0.2` the model is still reasonably trained while minimizing system overhead costs caused by the training.
- `maximum number of k-means iterations`: This is a parameter that can be passed to the model to limit the number of iterations in training the k-means model. In the vast majority of cases, you can leave it at its default.
- `dimension anomaly score threshold`: (`0.01`/`5.00`) This is the threshold at which an individual dimension at a specific timestep is considered anomalous or not. For example, the default of `0.99` means that a dimension with an anomaly score of 99% or higher is flagged as anomalous. This is a normalized probability based on the training data, so the default of 99% means that anything that is as strange (based on distance measure) or more strange as the most strange 1% of data observed during training will be flagged as anomalous. If you want to make the anomaly detection on individual dimensions more sensitive, you could try a value like `0.90` (90%); to make it less sensitive, you could try `1.5` (150%).
- `host anomaly rate threshold`: (`0.1`/`10.0`) This is the percentage of dimensions (based on all those enabled for anomaly detection) that need to be considered anomalous at a specific timestep for the host itself to be considered anomalous. For example, the default value of `1.0` means that if more than 1% of dimensions are anomalous at the same time, then the host itself is considered to be in an anomalous state.
- `anomaly detection grouping method`: The grouping method used when calculating the node-level anomaly rate.
- `anomaly detection grouping duration`: (`1m`/`15m`) The duration across which to calculate the node-level anomaly rate; the default of `5m` means that the node-level anomaly rate is calculated across a rolling 5-minute window.
- `hosts to skip from training`: This parameter allows you to turn off anomaly detection for any child hosts on a parent host by defining those you would like to skip from training here. For example, a value like `dev-*` skips all hosts on a parent that begin with the "dev-" prefix. The default value of `!*` means "don't skip any".
- `charts to skip from training`: This parameter allows you to exclude certain charts from anomaly detection. By default, only Netdata-related charts are excluded. This is to avoid the scenario where accessing the Netdata dashboard could itself trigger some anomalies if you don't access it regularly. If you want to include charts that are excluded by default, add them in small groups and then measure any impact on performance before adding additional ones. Example: to train only on system, apps, and user charts, use `!system.* !apps.* !user.* *` (patterns are evaluated left to right and the first match wins, so every other chart is skipped).
- `delete models older than`: (`1d`/`7d`) Deletes unused models from the database; by default, models are deleted after 7 days.

### General Settings

- **`enabled`**: Controls whether ML is enabled.
  - `yes` to enable.
  - `no` to disable.
  - `auto` lets Netdata decide based on database mode.
- **`maximum num samples to train`** (`3600` - `86400`): Defines the maximum training period. The default of `21600` trains on the last 6 hours of data (at a 1-second `update every`).
- **`minimum num samples to train`** (`900` - `21600`): The minimum amount of data needed to train a model. With the default of `900`, training is skipped if fewer than 900 samples (15 minutes of data at 1-second resolution) are available.

- **`train every`** (`3h` - `6h`): Determines how often models are retrained. The default of `3h` means retraining occurs every three hours. Training is staggered to distribute system load.
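One way to picture staggered training is to spread the retraining start times of all dimensions across one `train every` period. The sketch below assumes even spacing and uses the hypothetical name `staggered_training_offsets`. Netdata's actual scheduler is not described here and may distribute the work differently.

```python
def staggered_training_offsets(num_dimensions, train_every_s=3 * 3600):
    """Seconds into each train-every period at which each dimension retrains.

    Assumption: training is spread evenly across the period.
    """
    if num_dimensions <= 0:
        return []
    step = train_every_s / num_dimensions
    return [round(i * step) for i in range(num_dimensions)]


print(staggered_training_offsets(4))  # [0, 2700, 5400, 8100]
```

With 3,000 dimensions and the default `3h`, this even spread would start one training job roughly every 3.6 seconds instead of all at once.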

### Model Behavior

- **`number of models per dimension`** (`1` - `168`): Specifies how many trained models per dimension are used for anomaly detection. With the default `train every` of `3h`, the default of `18` means models trained over the last ~54 hours are considered.

- **`dbengine anomaly rate every`** (`30` - `900`): Defines how frequently Netdata aggregates anomaly bits into a single chart.
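The sample counts and time spans quoted in these parameters follow from simple arithmetic. The helpers below are hypothetical. The span calculation uses this document's own approximation (models × `train every`), and durations depend on `update every`.

```python
def samples_to_hours(samples, update_every_s=1):
    """Hours of data represented by a sample count at a given collection interval."""
    return samples * update_every_s / 3600


def models_span_hours(models_per_dimension=18, train_every_h=3):
    """Approximate history covered by the scoring models: models x train-every."""
    return models_per_dimension * train_every_h


print(samples_to_hours(21600))                    # 6.0  (maximum training window)
print(samples_to_hours(900) * 60)                 # 15.0 (minutes before the first model)
print(models_span_hours())                        # 54   (hours covered by 18 models)
print(samples_to_hours(21600, update_every_s=2))  # 12.0 (same samples, 2 s interval)
```

The last line shows why the docs say "assuming an `update every` of 1 second": the same sample count spans more time on slower-collected metrics.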

### Feature Processing

- **`num samples to diff`** (`0` - `1`): Determines whether ML operates on raw data (`0`) or on differences (`1`). Using differences makes the model less sensitive to normal trends and cycles in a metric.
- **`num samples to smooth`** (`0` - `5`): Controls data smoothing. The default of `3` averages the last three values to reduce noise.
- **`num samples to lag`** (`0` - `5`): Defines how many past values are included in the feature vector. The default `5` helps the model detect patterns over time.

### Training Efficiency

- **`random sampling ratio`** (`0.2` - `1.0`): Controls the fraction of data used for training. The default `0.2` means 20% of available data is used, reducing system load.

- **`maximum number of k-means iterations`**: Limits iterations during k-means clustering (leave at default in most cases).
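The effect of `random sampling ratio` can be illustrated by drawing a random subset of the training window. This sketch samples without replacement using Python's `random.sample`. The helper name is hypothetical, and Netdata may select samples differently.

```python
import random


def sample_training_data(vectors, ratio=0.2, seed=None):
    """Keep a random subset of roughly `ratio` of the training vectors."""
    if not 0 < ratio <= 1:
        raise ValueError("ratio must be in (0, 1]")
    if not vectors:
        return []
    k = max(1, round(len(vectors) * ratio))
    return random.Random(seed).sample(vectors, k)


data = list(range(21600))  # e.g. 6 hours of 1-second samples
subset = sample_training_data(data, 0.2, seed=1)
print(len(subset))  # 4320
```

Training cost grows with the number of vectors, so a ratio of `0.2` cuts the k-means work to about a fifth while still drawing from the whole training window.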

### Anomaly Detection Sensitivity

- **`dimension anomaly score threshold`** (`0.01` - `5.00`): Sets the threshold for flagging an anomaly. The default `0.99` flags values that are at least as unusual as the most unusual 1% of the training data.
- **`host anomaly rate threshold`** (`0.1` - `10.0`): Defines the percentage of dimensions that must be anomalous for the host to be considered anomalous. The default `1.0` means more than 1% must be anomalous.

### Anomaly Detection Grouping

- **`anomaly detection grouping method`**: Defines the method used to calculate the node-level anomaly rate.
- **`anomaly detection grouping duration`** (`1m` - `15m`): Determines the time window for calculating anomaly rates. The default `5m` calculates the node-level anomaly rate over a 5-minute rolling window.

### Skipping Hosts and Charts

- **`hosts to skip from training`**: Allows excluding specific child hosts from training. The default `!*` means no hosts are skipped.

- **`charts to skip from training`**: Excludes charts from anomaly detection. By default, Netdata-related charts are excluded to prevent false anomalies caused by normal dashboard activity.
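Both skip settings take space-separated patterns. The sketch below models them as "evaluate left to right, first match wins, a leading `!` makes it a negative match". It is a simplification built on Python's `fnmatch`, which also understands `?` and `[...]` wildcards that Netdata's simple patterns may not. `simple_pattern_match` is a hypothetical name.

```python
from fnmatch import fnmatchcase


def simple_pattern_match(patterns, name):
    """Return True if `name` is selected (i.e. skipped) by the pattern list.

    Patterns are checked left to right; the first one that matches decides.
    A leading `!` turns a match into a negative match (not selected).
    If nothing matches, the name is not selected.
    """
    for pattern in patterns.split():
        negative = pattern.startswith("!")
        glob = pattern[1:] if negative else pattern
        if fnmatchcase(name, glob):
            return not negative
    return False


charts_to_skip = "!system.* !apps.* !user.* *"
print(simple_pattern_match(charts_to_skip, "system.cpu"))  # False: trained
print(simple_pattern_match(charts_to_skip, "disk.io"))     # True: skipped
print(simple_pattern_match("!*", "child-1"))               # False: no host skipped
```

This is why `!system.* !apps.* !user.* *` trains only on those three chart families: any other chart falls through to the final `*` and is skipped.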

### Model Retention

- **`delete models older than`** (`1d` - `7d`): Defines how long old models are stored. The default `7d` removes unused models after seven days.
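Before restarting Netdata, it can help to sanity-check an edited `[ml]` section against the min/max values listed in this section. This is a hypothetical helper built on Python's standard `configparser` (with interpolation disabled so `%` is not special). It only checks numeric parameters, so duration values such as `3h` are ignored. Netdata itself may accept, clamp, or reject out-of-range values differently.

```python
import configparser

# Min/max values as listed in the parameter descriptions.
ML_RANGES = {
    "maximum num samples to train": (3600, 86400),
    "minimum num samples to train": (900, 21600),
    "number of models per dimension": (1, 168),
    "dbengine anomaly rate every": (30, 900),
    "num samples to diff": (0, 1),
    "num samples to smooth": (0, 5),
    "num samples to lag": (0, 5),
    "random sampling ratio": (0.2, 1.0),
    "dimension anomaly score threshold": (0.01, 5.00),
    "host anomaly rate threshold": (0.1, 10.0),
}


def check_ml_section(conf_text):
    """Return a list of problems found in the [ml] section of a config snippet."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    if not parser.has_section("ml"):
        return ["no [ml] section"]
    problems = []
    for key, (low, high) in ML_RANGES.items():
        if not parser.has_option("ml", key):
            continue  # not set: Netdata uses its default
        raw = parser.get("ml", key)
        try:
            value = float(raw)
        except ValueError:
            problems.append(f"{key}: not a number ({raw!r})")
            continue
        if not low <= value <= high:
            problems.append(f"{key}: {value:g} outside [{low:g}, {high:g}]")
    return problems


example = """
[ml]
enabled = yes
number of models per dimension = 200
random sampling ratio = 0.2
"""
print(check_ml_section(example))
# ['number of models per dimension: 200 outside [1, 168]']
```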
