mirror of

https://github.com/netdata/netdata.git

synced 2025-04-13 17:19:11 +00:00

Reorg learn 0227 (#14621)

* reorg batch 1 * remove duplicate cloud custom dashboard and agent dashboard * Simplify the root web/README * Merge streaming references * Make enable streaming the overall intro and the README the reference * Remove reference-streaming document * Update overview pages

parent

9fabe2340f

commit

8c73c47645

23 changed files with 629 additions and 2327 deletions

docs

category-overview-pages

concepts-overview.md

developers-overview.md

operations-overview.md

troubleshooting-overview.md

visualizations-overview.md

contributing

dashboard

glossary.md

guidelines.md

guides/troubleshoot

metrics-storage-management

quickstart

visualize

exporting

health

packaging/installer

streaming

web

@@ -1,10 +0,0 @@
<!--
title: "Concepts"
sidebar_label: "Concepts"
custom_edit_url: "https://github.com/netdata/netdata/blob/master/docs/category-overview-pages/concepts-overview.md"
learn_status: "Published"
learn_rel_path: "Concepts"
sidebar_position: 10
-->

This category will help you understand how key features and components work in Netdata.

@@ -1,10 +0,0 @@
<!--
title: "Developers"
sidebar_label: "Developers"
custom_edit_url: "https://github.com/netdata/netdata/blob/master/docs/category-overview-pages/developers-overview.md"
learn_status: "Published"
learn_rel_path: "Developers"
sidebar_position: 100
-->

In this category you will find information that will aid you while developing with Netdata.

@@ -1,10 +0,0 @@
<!--
title: "Operations"
sidebar_label: "Operations"
custom_edit_url: "https://github.com/netdata/netdata/blob/master/docs/category-overview-pages/operations-overview.md"
learn_status: "Published"
learn_rel_path: "Operations"
sidebar_position: 40
-->

In this category you will find all the instructions on "operations" you can perform with Netdata, whether that would be using the Anomaly Advisor to surface any potential unexpected behavior, or how to interact with the charts etc.

5

docs/category-overview-pages/troubleshooting-overview.md

Normal file

@@ -0,0 +1,5 @@
# Troubleshooting and machine learning

In this section you can learn about Netdata's advanced tools that can assist you in troubleshooting issues with
your infrastructure, to facilitate the identification of a root cause.

4

docs/category-overview-pages/visualizations-overview.md

Normal file

@@ -0,0 +1,4 @@
# Visualizations, charts and dashboards

In this section you can learn about the various ways Netdata visualizes the collected metrics at an infrastructure level with Netdata Cloud
and at a single node level, with the Netdata Agent Dashboard.

|

|

@ -1,13 +1,3 @@

|

|||

<!--

|

||||

title: "Netdata style guide"

|

||||

description: "The Netdata style guide establishes editorial guidelines for all of Netdata's writing, including documentation, blog posts, in-product UX copy, and more."

|

||||

custom_edit_url: https://github.com/netdata/netdata/edit/master/docs/contributing/style-guide.md

|

||||

sidebar_label: "Netdata style guide"

|

||||

learn_status: "Published"

|

||||

learn_topic_type: "References"

|

||||

learn_rel_path: "Contribute"

|

||||

-->

|

||||

|

||||

# Netdata style guide

|

||||

|

||||

The _Netdata style guide_ establishes editorial guidelines for any writing produced by the Netdata team or the Netdata

|

||||

|

|

@ -357,34 +347,6 @@ The Netdata team uses [`remark-lint`](https://github.com/remarkjs/remark-lint) f

|

|||

If you want to see all the settings, open the

|

||||

[`remarkrc.js`](https://github.com/netdata/netdata/blob/master/.remarkrc.js) file in the `netdata/netdata` repository.

|

||||

|

||||

### Frontmatter

|

||||

|

||||

Every document must begin with frontmatter, followed by an H1 (`#`) heading.

|

||||

|

||||

Unlike typical Markdown frontmatter, Netdata uses HTML comments (`<!--`, `-->`) to begin and end the frontmatter block.

|

||||

These HTML comments are later converted into typical frontmatter syntax when building [Netdata

|

||||

Learn](https://learn.netdata.cloud).
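As a rough illustration of that conversion, the sketch below rewrites a leading HTML-comment block into `---`-delimited frontmatter. It is not the Netdata Learn build tooling: the function name `comment_frontmatter_to_yaml` is hypothetical, and the sketch assumes the comment is the first thing in the file.

```python
def comment_frontmatter_to_yaml(text: str) -> str:
    """Turn a leading <!-- ... --> block into ----delimited frontmatter.

    Hypothetical helper for illustration only; documents that do not
    start with an HTML comment are returned unchanged.
    """
    stripped = text.lstrip()
    if not stripped.startswith("<!--"):
        return text
    end = stripped.find("-->")
    if end == -1:
        # Unterminated comment: leave the document alone.
        return text
    body = stripped[len("<!--"):end].strip("\n")
    rest = stripped[end + len("-->"):]
    return f"---\n{body}\n---{rest}"
```

Everything after the closing `-->` is passed through untouched, so the H1 heading that must follow the frontmatter stays in place.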

|

||||

|

||||

Frontmatter _must_ contain the following variables:

|

||||

|

||||

- A `title` that quickly and distinctly describes the document's content.

|

||||

- A `description` that elaborates on the purpose or goal of the document using no less than 100 characters and no more

|

||||

than 155 characters.

|

||||

- A `custom_edit_url` that links directly to the GitHub URL where another user could suggest additional changes to the

|

||||

published document.

|

||||

|

||||

Some documents, like the Ansible guide and others in the `/docs/guides` folder, require an `image` variable as well. In

|

||||

this case, replace `/docs` with `/img/seo`, and then rebuild the remainder of the path to the document in question. End

|

||||

the path with `.png`. A member of the Netdata team will assist in creating the image when publishing the content.
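The rules above can be checked mechanically. The following is a minimal sketch, assuming the frontmatter has already been parsed into a dictionary; `frontmatter_problems` and `seo_image_path` are hypothetical helper names, not part of any Netdata tooling.

```python
from pathlib import PurePosixPath

# Variables the style guide says every document must define.
REQUIRED_KEYS = ("title", "description", "custom_edit_url")


def frontmatter_problems(fields: dict) -> list:
    """Return human-readable problems for one document's frontmatter."""
    problems = [f"missing `{key}`" for key in REQUIRED_KEYS if not fields.get(key)]
    description = fields.get("description", "")
    # The guide asks for no less than 100 and no more than 155 characters.
    if description and not 100 <= len(description) <= 155:
        problems.append(
            f"`description` is {len(description)} characters; expected 100-155"
        )
    return problems


def seo_image_path(doc_path: str) -> str:
    """Swap the /docs prefix for /img/seo and end the path with .png."""
    relative = PurePosixPath(doc_path).relative_to("/docs")
    return str(PurePosixPath("/img/seo") / relative.with_suffix(".png"))
```

For instance, `seo_image_path("/docs/guides/example.md")` gives `/img/seo/guides/example.png`; the input path here is made up for the example.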

|

||||

|

||||

For example, here is the frontmatter for the guide

|

||||

about [deploying the Netdata Agent with Ansible](https://github.com/netdata/netdata/blob/master/packaging/installer/methods/ansible.md).

|

||||

|

||||

<img width="751" alt="image" src="https://user-images.githubusercontent.com/43294513/217607958-ef0f270d-7947-4d91-a9a5-56b17b4255ee.png"/>

|

||||

|

||||

Questions about frontmatter in

|

||||

documentation? [Ask on our community forum](https://community.netdata.cloud/c/blog-posts-and-articles/6).

|

||||

|

||||

### Linking between documentation

|

||||

|

||||

Documentation should link to other pages whenever doing so provides valuable context to the reader.

|

||||

|

|

|

|||

|

|

@ -1,19 +1,6 @@

|

|||

<!--

|

||||

title: "Customize the standard dashboard"

|

||||

description: >-

|

||||

"Netdata's preconfigured dashboard offers many customization options, such as choosing when

|

||||

charts are updated, your preferred theme, and custom text to document processes, and more."

|

||||

type: "how-to"

|

||||

custom_edit_url: "https://github.com/netdata/netdata/blob/master/docs/dashboard/customize.md"

|

||||

sidebar_label: "Customize the standard dashboard"

|

||||

learn_status: "Published"

|

||||

learn_topic_type: "Tasks"

|

||||

learn_rel_path: "Operations"

|

||||

-->

|

||||

|

||||

# Customize the standard dashboard

|

||||

|

||||

While the [Netdata dashboard](https://github.com/netdata/netdata/blob/master/docs/dashboard/how-dashboard-works.md) comes preconfigured with hundreds of charts and

|

||||

While the [Netdata dashboard](https://github.com/netdata/netdata/blob/master/web/gui/README.md) comes preconfigured with hundreds of charts and

|

||||

thousands of metrics, you may want to alter your experience based on a particular use case or preferences.

|

||||

|

||||

## Dashboard settings

|

||||

|

|

|

|||

|

|

@ -67,12 +67,3 @@ names:

|

|||

| `disk.ops` | `disk_ops.sda` | `disk_ops.sdb` |

|

||||

| `disk.backlog` | `disk_backlog.sda` | `disk_backlog.sdb` |

|

||||

| `disk.util` | `disk_util.sda` | `disk_util.sdb` |

|

||||

|

||||

## What's next?

|

||||

|

||||

With an understanding of a chart's dimensions, context, and family, you're now ready to dig even deeper into Netdata's

|

||||

dashboard. We recommend looking into [using the timeframe selector](https://github.com/netdata/netdata/blob/master/docs/dashboard/visualization-date-and-time-controls.md).

|

||||

|

||||

If you feel comfortable with the [dashboard](https://github.com/netdata/netdata/blob/master/docs/dashboard/how-dashboard-works.md) and interacting with charts, we

|

||||

recommend learning about [configuration](https://github.com/netdata/netdata/blob/master/docs/configure/nodes.md). While Netdata doesn't _require_ a complicated setup

|

||||

process or a query language to create charts, there are a lot of ways to tweak the experience to match your needs.

|

||||

|

|

|

|||

|

|

@ -1,99 +0,0 @@

|

|||

<!--

|

||||

title: "How the dashboard works"

|

||||

description: >-

|

||||

"Learn how to navigate Netdata's preconfigured dashboard to get started

|

||||

exploring, visualizing, and troubleshooting in real time."

|

||||

type: "explanation"

|

||||

custom_edit_url: "https://github.com/netdata/netdata/blob/master/docs/dashboard/how-dashboard-works.md"

|

||||

sidebar_label: "How the dashboard works"

|

||||

learn_status: "Published"

|

||||

learn_topic_type: "Concepts"

|

||||

learn_rel_path: "Concepts"

|

||||

-->

|

||||

|

||||

# How the dashboard works

|

||||

|

||||

Because Netdata is a monitoring and _troubleshooting_ platform, a dashboard with real-time, meaningful, and

|

||||

context-aware charts is essential.

|

||||

|

||||

As soon as you [install Netdata](https://github.com/netdata/netdata/blob/master/packaging/installer/README.md), it autodetects hardware, OS, containers, services, and

|

||||

applications running on your node and builds a dashboard on a single, scrollable webpage. This page features hundreds of

|

||||

charts, which are preconfigured to save you time from learning a query language, all stacked on top of one another. This

|

||||

vertical rhythm is designed to encourage exploration and help you visually identify connections between the metrics

|

||||

visualized in different charts.

|

||||

|

||||

It's essential to understand the core concepts and features of Netdata's dashboard if you want to maximize your Netdata

|

||||

experience right after installation.

|

||||

|

||||

## Open the dashboard

|

||||

|

||||

Access Netdata's dashboard by navigating to `http://NODE:19999` in your browser, replacing `NODE` with either

|

||||

`localhost` or the hostname/IP address of a remote node.
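A small sketch of how that address is put together, assuming the default port of 19999 (the listen port is configurable, so treat it as a parameter). `dashboard_url` is a hypothetical helper name.

```python
def dashboard_url(node: str = "localhost", port: int = 19999) -> str:
    """Build the dashboard address for a node.

    `node` is a hostname or IP address; IPv6 literals are wrapped in
    brackets so the port separator stays unambiguous.
    """
    host = f"[{node}]" if ":" in node else node
    return f"http://{host}:{port}"
```

Calling `dashboard_url()` with no arguments targets the local node; pass a hostname or IP address for a remote one.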

|

||||

|

||||

|

||||

|

||||

Many features of the internal web server that serves the dashboard are [configurable](https://github.com/netdata/netdata/blob/master/web/server/README.md), including

|

||||

the listen port, enforced TLS, and even disabling the dashboard altogether.

|

||||

|

||||

## Sections and menus

|

||||

|

||||

As mentioned in the introduction, Netdata automatically organizes all the metrics it collects from your node, and places

|

||||

them into **sections** of closely related charts.

|

||||

|

||||

The first section on any dashboard is the **System Overview**, followed by **CPUs**, **Memory**, and so on.

|

||||

|

||||

These sections populate the **menu**, which is on the right-hand side of the dashboard. Instead of manually scrolling up

|

||||

and down to explore the dashboard, it's generally faster to click on the relevant menu item to jump to that position on

|

||||

the dashboard.

|

||||

|

||||

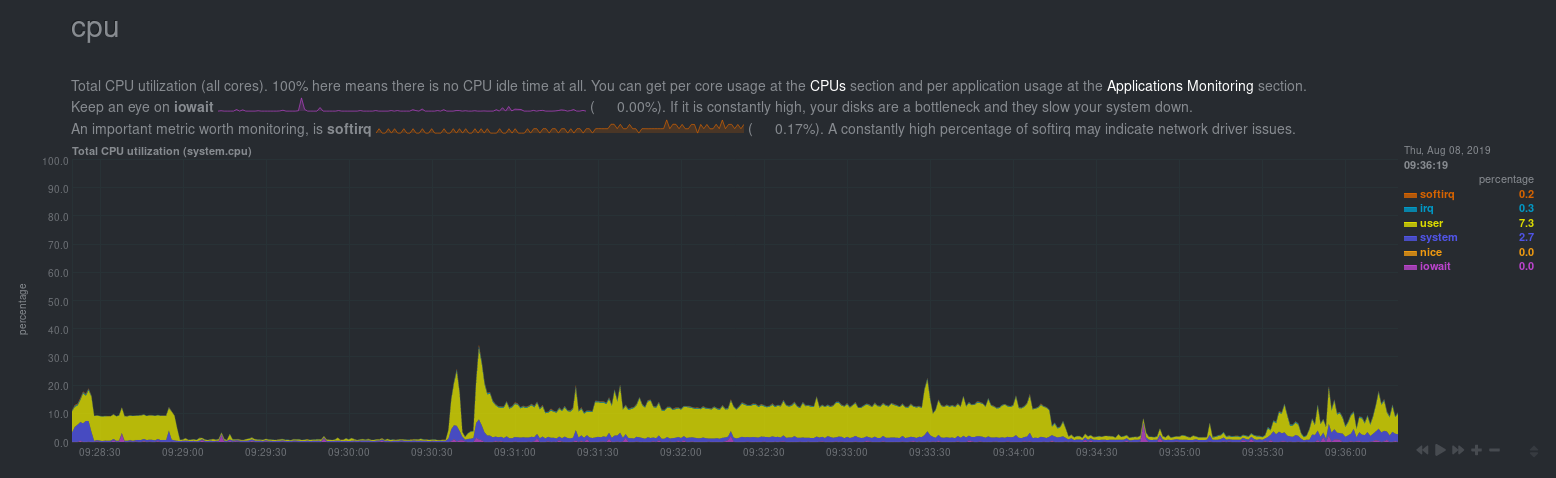

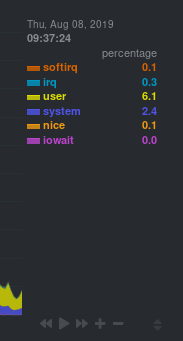

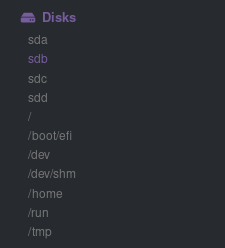

Many menu items also contain a **submenu**, with links to additional categories. For example, the **Disks** section is often separated into multiple groups based on the number of disk drives/partitions on your node, which are also known as a family.

|

||||

|

||||

|

||||

|

||||

## Charts

|

||||

|

||||

Every **chart** in the Netdata dashboard is fully interactive. Netdata

|

||||

synchronizes your interactions to help you understand exactly how a node behaved in any timeframe, whether that's

|

||||

seconds or days.

|

||||

|

||||

A chart is an individual, interactive, always-updating graphic displaying one or more collected/calculated metrics,

|

||||

which are generated by [collectors](https://github.com/netdata/netdata/blob/master/collectors/README.md).

|

||||

|

||||

|

||||

|

||||

Hover over any chart to temporarily pause it and see the exact metrics values presented as different dimensions. Click

|

||||

or tap to stop the chart from automatically updating with new metrics, thereby locking it to a single timeframe.

|

||||

Double-click it to resume auto-updating.

|

||||

|

||||

Let's cover two of the most important ways to interact with charts: panning through time and zooming.

|

||||

|

||||

To pan through time, **click and hold** (or touch and hold) on any chart, then **drag your mouse** (or finger) to the

|

||||

left or right. Drag to the right to pan backward through time, or drag to the left to pan forward in time. Think of it

|

||||

like pushing the current timeframe off the screen to see what came before or after.

|

||||

|

||||

To zoom, press and hold `Shift`, then use your mouse's scroll wheel, or a two-finger pinch if you're using a touchpad.

|

||||

|

||||

See [interact with charts](https://github.com/netdata/netdata/blob/master/docs/cloud/visualize/interact-new-charts.md) for all the possible ways to interact with the charts on

|

||||

your dashboard.

|

||||

|

||||

## Alarms

|

||||

|

||||

Many of the preconfigured charts on the Netdata dashboard also come with preconfigured alarms. Alarms report three primary states: `CLEAR`, `WARNING`, and `CRITICAL`. If an alarm moves from a `CLEAR` state to either

|

||||

`WARNING` or `CRITICAL`, Netdata creates a notification to let you know exactly what's going on. There are [other alarm

|

||||

states](https://github.com/netdata/netdata/blob/master/health/REFERENCE.md#alarm-statuses) as well.
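The transition rule described here can be sketched as a single predicate. This models only the one case the text mentions (leaving `CLEAR` for `WARNING` or `CRITICAL`); it is not Netdata's health engine, which handles additional states and transitions, and `should_notify` is a hypothetical name.

```python
# States that trigger a notification when entered from CLEAR.
RAISED_STATES = {"WARNING", "CRITICAL"}


def should_notify(previous: str, current: str) -> bool:
    """True when an alarm leaves CLEAR for WARNING or CRITICAL."""
    return previous == "CLEAR" and current in RAISED_STATES
```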

|

||||

|

||||

The easiest way to see alarms is by clicking on the alarm icon

|

||||

in the top panel to open the alarms panel, which shows you all the active alarms. The other **All** tab shows every

|

||||

active alarm, and the **Log** tab shows a historical record of exactly when alarms triggered and to which state.

|

||||

|

||||

|

||||

|

||||

Learn more about [viewing active alarms](https://github.com/netdata/netdata/blob/master/docs/monitor/view-active-alarms.md), [configuring

|

||||

alarms](https://github.com/netdata/netdata/blob/master/health/REFERENCE.md), or [enabling a new notification

|

||||

method](https://github.com/netdata/netdata/blob/master/docs/monitor/enable-notifications.md).

|

||||

179

docs/glossary.md

Normal file

|

|

@ -0,0 +1,179 @@

|

|||

# Glossary

|

||||

|

||||

The Netdata community welcomes engineers, SREs, admins, etc. of all levels of expertise with engineering and the Netdata tool. And just as a journey of a thousand miles starts with one step, sometimes, the journey to mastery begins with understanding a single term.

|

||||

|

||||

As such, we want to provide a little Glossary as a reference starting point for new users who might be confused about the Netdata vernacular that more familiar users might take for granted.

|

||||

|

||||

If you're here looking for the definition of a term you heard elsewhere in our community or products, or if you just want to learn Netdata from the ground up, you've come to the right page.

|

||||

|

||||

Use the alphabetized list below to find the answer to your single-term questions, and click the bolded list items to explore more on the topics! We'll keep updating this list, so if you hear a word that you would like us to cover, just let us know or submit a request!

|

||||

|

||||

[A](#a) | [B](#b) | [C](#c) | [D](#d) | [E](#e) | [F](#f) | [G](#g) | [H](#h) | [I](#i) | [J](#j) | [K](#k) | [L](#l) | [M](#m) | [N](#n) | [O](#o) | [P](#p) | [Q](#q) | [R](#r) | [S](#s) | [T](#t) | [U](#u) | [V](#v) | [W](#w) | [X](#x) | [Y](#y) | [Z](#z)

|

||||

|

||||

## A

|

||||

|

||||

- [**Agent** or **Netdata Agent**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-agent/overview.md): Netdata's distributed monitoring Agent collects thousands of metrics from systems, hardware, and applications with zero configuration. It runs permanently on all your physical/virtual servers, containers, cloud deployments, and edge/IoT devices.

|

||||

|

||||

- [**Agent-Cloud link** or **ACLK**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-agent/aclk.md): The Agent-Cloud link (ACLK) is the mechanism responsible for securely connecting a Netdata Agent to your web browser through Netdata Cloud.

|

||||

|

||||

- [**Aggregate Function**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-cloud/netdata-views.md#aggregate-functions-over-data-sources): A function applied when the granularity of the data collected is higher than the plotted points on the chart.

|

||||

|

||||

- [**Alerts** (formerly **Alarms**)](https://github.com/netdata/netdata/blob/master/docs/concepts/health-monitoring/alerts.md): With the information that appears on Netdata Cloud and the local dashboard about active alerts, you can configure alerts to match your infrastructure's needs or your team's goals.

|

||||

|

||||

- [**Alarm Entity Type**](https://github.com/netdata/netdata/blob/master/docs/concepts/health-monitoring/alerts.md#entity-types): Entity types that are attached to specific charts and use the `alarm` label.

|

||||

|

||||

- [**Anomaly Advisor**](https://github.com/netdata/netdata/blob/master/docs/concepts/guided-troubleshooting/machine-learning-powered-anomaly-advisor.md): A Netdata feature that lets you quickly surface potentially anomalous metrics and charts related to a particular highlight window of interest.

|

||||

|

||||

## B

|

||||

|

||||

- [**Bookmarks**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-cloud/rooms.md#bookmarks-for--essential-resources): Netdata Cloud's bookmarks put your tools in one accessible place. Bookmarks are shared between all War Rooms in a Space, so any users in your Space will be able to see and use them.

|

||||

|

||||

## C

|

||||

|

||||

- [**Child**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-agent/metrics-streaming-replication.md#streaming-basics): A node, running Netdata, that streams metric data to one or more parents.

|

||||

|

||||

- [**Cloud** or **Netdata Cloud**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-cloud/overview.md): Netdata Cloud is a web application that gives you real-time visibility for your entire infrastructure. With Netdata Cloud, you can view key metrics, insightful charts, and active alarms from all your nodes in a single web interface.

|

||||

|

||||

- [**Collector**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-agent/metrics-collection.md#collector-architecture-and-terminology): A catch-all term for any Netdata process that gathers metrics from an endpoint.

|

||||

|

||||

- [**Community**](https://github.com/netdata/netdata/blob/master/docs/getting-started/introduction.md#community): As a company with a passion and genesis in open-source, we are not just very proud of our community, but we consider our users, fans, and chatters to be an imperative part of the Netdata experience and culture.

|

||||

|

||||

- [**Composite Charts**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-cloud/netdata-views.md#composite-charts): Charts used by the **Overview** tab which aggregate metrics from all the nodes (or a filtered selection) in a given War Room.

|

||||

|

||||

- [**Context**](https://github.com/netdata/netdata/blob/master/docs/concepts/visualizations/from-raw-metrics-to-visualization.md#context): A way of grouping charts by the types of metrics collected and dimensions displayed. It's kind of like a machine-readable naming and organization scheme.

|

||||

|

||||

- [**Custom dashboards**](https://github.com/netdata/netdata/blob/master/docs/concepts/visualizations/dashboards.md#custom-dashboards): A dashboard that you can create using simple HTML (no JavaScript is required for basic dashboards).

|

||||

|

||||

## D

|

||||

|

||||

- [**Dashboards**](https://github.com/netdata/netdata/blob/master/docs/concepts/visualizations/dashboards.md): Out-of-the-box visual presentation of metrics that allows you to make sense of your infrastructure and its health and performance.

|

||||

|

||||

- [**Definition Bar**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-cloud/netdata-views.md#composite-charts): Bar within a composite chart that provides important information and options about the metrics within the chart.

|

||||

|

||||

- [**Dimension** or **Group by**](https://github.com/netdata/netdata/blob/master/docs/concepts/visualizations/from-raw-metrics-to-visualization.md#dimension): A dimension is a value that gets shown on a chart. **Group by** is the drop-down on the dimension bar of a composite chart that allows you to group metrics by dimension, node, or chart.

|

||||

|

||||

- [**Distributed Architecture**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-architecture/distributed-data-architecture.md): The data architecture mindset with which Netdata was built, where all data are collected and stored on the edge, whenever it's possible, creating countless benefits.

|

||||

|

||||

## E

|

||||

|

||||

- [**External Plugins**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-agent/metrics-collection.md#collector-architecture-and-terminology): These gather metrics from external processes, such as a webserver or database, and run as independent processes that communicate with the Netdata daemon via pipes.

|

||||

|

||||

## F

|

||||

|

||||

- [**Family**](https://github.com/netdata/netdata/blob/master/docs/concepts/visualizations/from-raw-metrics-to-visualization.md#family): 1. What we consider our Netdata community of users and engineers. 2. A single instance of a hardware or software resource that needs to be displayed separately from similar instances.

|

||||

|

||||

- [**Flood Protection**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-cloud/netdata-views.md#group-by-dimension-node-or-chart): If a node has too many state changes, like firing too many alerts or going from reachable to unreachable, Netdata Cloud enables flood protection. As long as a node is in flood protection mode, Netdata Cloud does not send notifications about this node.

|

||||

|

||||

- [**Functions** or **Netdata Functions**](https://github.com/netdata/netdata/blob/master/docs/concepts/guided-troubleshooting/netdata-functions.md): Routines exposed by a collector on the Netdata Agent that can bring additional information to support troubleshooting or trigger some action to happen on the node itself.

|

||||

|

||||

## G

|

||||

|

||||

- [**Guided Troubleshooting**](https://github.com/netdata/netdata/blob/master/docs/concepts/guided-troubleshooting/Overview.md): Troubleshooting with our Machine-Learning-powered tools designed to give you a cutting edge advantage in your troubleshooting battles.

|

||||

|

||||

- [**Group by** or **Dimension**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-cloud/netdata-views.md#group-by-dimension-node-or-chart): A dimension is a value that gets shown on a chart. **Group by** is the drop-down on the dimension bar of a composite chart that allows you to group metrics by dimension, node, or chart.

|

||||

|

||||

## H

|

||||

|

||||

- [**Headless Collector Streaming**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-agent/metrics-streaming-replication.md): Streaming configuration where child `A`, _without_ a database or web dashboard, streams metrics to parent `B`.

|

||||

|

||||

- [**Health Configuration Files**](https://github.com/netdata/netdata/blob/master/docs/concepts/health-monitoring/alerts.md#health-configuration-files): Files that you can edit to configure your Agent's health watchdog service.

|

||||

|

||||

- [**Health Entity Reference**](https://github.com/netdata/netdata/blob/master/docs/concepts/health-monitoring/alerts.md#health-entity-reference):

|

||||

|

||||

- [**High Fidelity** or **High Fidelity Architecture**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-architecture/high-fidelity-monitoring.md): We consider Netdata's monitoring solution "high fidelity" because it provides real-time metrics, so you can view changes within seconds of when they occur; the highest resolution of metrics, so you can observe changes that occur between seconds; fixed-step metric collection, so you can quantify your observation windows; and unlimited data, so you can search for patterns in metrics you would not expect to be correlated.

|

||||

|

||||

- [**Home** tab](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-cloud/netdata-views.md#home): Tab in Netdata Cloud that provides a predefined dashboard of relevant information about entities in the War Room.

|

||||

|

||||

## I

|

||||

|

||||

- [**Internal plugins**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-agent/metrics-collection.md#collector-architecture-and-terminology): These gather metrics from `/proc`, `/sys`, and other Linux kernel sources. They are written in `C` and run as threads within the Netdata daemon.

|

||||

|

||||

## K

|

||||

|

||||

- [**Kickstart** or **Kickstart Script**](https://github.com/netdata/netdata/blob/master/packaging/installer/methods/kickstart.md): An automatic one-line installation script named 'kickstart.sh' that works on all Linux distributions and macOS.

|

||||

|

||||

- [**Kubernetes Dashboard** or **Kubernetes View**](https://github.com/netdata/netdata/blob/master/docs/concepts/visualizations/dashboards.md#kubernetes-dashboard): Netdata Cloud features enhanced visualizations for the resource utilization of Kubernetes (k8s) clusters, embedded in the default Overview dashboard.

|

||||

## M

|

||||

|

||||

- [**Metrics Collection**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-agent/metrics-collection.md): With zero configuration, Netdata auto-detects thousands of data sources upon starting and immediately collects per-second metrics. Netdata can immediately collect metrics from these endpoints thanks to 300+ collectors, which all come pre-installed when you install Netdata.

|

||||

|

||||

- [**Metric Correlations**](https://github.com/netdata/netdata/blob/master/docs/concepts/guided-troubleshooting/metric-correlations.md): A Netdata feature that lets you quickly find metrics and charts related to a particular window of interest that you want to explore further.

|

||||

|

||||

- [**Metrics Exporting**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-agent/metrics-exporting.md): Netdata allows you to export metrics to external time-series databases with the exporting engine. This system uses a number of connectors to initiate connections to more than thirty supported databases, including InfluxDB, Prometheus, Graphite, ElasticSearch, and much more.

|

||||

|

||||

- [**Metrics Storage**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-agent/metrics-storage.md): Once collected, metrics need to be forwarded, exported, or stored for further treatment. The Agent is capable of storing metrics both short and long-term, with or without the use of non-volatile storage.

|

||||

|

||||

- [**Metrics Streaming Replication**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-agent/metrics-streaming-replication.md): Each node running Netdata can stream the metrics it collects, in real time, to another node. Metric streaming allows you to replicate metrics data across multiple nodes, or centralize all your metrics data into a single time-series database (TSDB).

|

||||

|

||||

- [**Module**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-agent/metrics-collection.md#collector-architecture-and-terminology): A type of collector.

|

||||

|

||||

## N

|

||||

|

||||

- [**Netdata**](https://github.com/netdata/netdata/blob/master/docs/getting-started/introduction.md): Netdata is a monitoring tool designed by system administrators, DevOps engineers, and developers to collect everything, help you visualize

|

||||

metrics, troubleshoot complex performance problems, and make data interoperable with the rest of your monitoring stack.

|

||||

|

||||

- [**Netdata Agent** or **Agent**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-agent/overview.md): Netdata's distributed monitoring Agent collects thousands of metrics from systems, hardware, and applications with zero configuration. It runs permanently on all your physical/virtual servers, containers, cloud deployments, and edge/IoT devices.

|

||||

|

||||

- [**Netdata Cloud** or **Cloud**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-agent/overview.md): Netdata Cloud is a web application that gives you real-time visibility for your entire infrastructure. With Netdata Cloud, you can view key metrics, insightful charts, and active alarms from all your nodes in a single web interface.

|

||||

|

||||

- [**Netdata Functions** or **Functions**](https://github.com/netdata/netdata/blob/master/docs/concepts/guided-troubleshooting/netdata-functions.md): Routines exposed by a collector on the Netdata Agent that can bring additional information to support troubleshooting or trigger some action to happen on the node itself.

|

||||

|

||||

<!-- No link for this keyword - [**Netdata Logs**](https://github.com/netdata/netdata/blob/master/docs/tasks/miscellaneous/check-netdata-logs.md): The three log files - `error.log`, `access.log` and `debug.log` - used by Netdata -->

|

||||

|

||||

- [**Notifications**](https://github.com/netdata/netdata/blob/master/docs/concepts/health-monitoring/notifications.md): Netdata can send centralized alert notifications to your team whenever a node enters a warning, critical, or unreachable state. By enabling notifications, you ensure no alert, on any node in your infrastructure, goes unnoticed by you or your team.

|

||||

|

||||

## O

|

||||

|

||||

- [**Obsoletion** (of nodes)](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-cloud/spaces.md#obsolete-offline-nodes): Removing nodes from a Space.

|

||||

|

||||

- [**Orchestrators**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-agent/metrics-collection.md#collector-architecture-and-terminology): External plugins that run and manage one or more modules. They run as independent processes.

|

||||

|

||||

- [**Overview** tab](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-cloud/netdata-views.md#overview): Tab in Netdata Cloud that uses composite charts. These charts display real-time aggregated metrics from all the nodes (or a filtered selection) in a given War Room.

|

||||

|

||||

## P

|

||||

|

||||

- [**Parent**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-agent/metrics-streaming-replication.md#streaming-basics): A node, running Netdata, that receives streamed metric data.

|

||||

|

||||

- [**Proxy**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-agent/metrics-streaming-replication.md#streaming-basics): A node, running Netdata, that receives metric data from a child and "forwards" it on to a separate parent node.

|

||||

|

||||

- [**Proxy Streaming**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-agent/metrics-streaming-replication.md#supported-streaming-configurations): Streaming configuration where child `A`, _with or without_ a database, sends metrics to proxy `C`, also _with or without_ a database. `C` sends metrics to parent `B`.

|

||||

|

||||

## R

|

||||

|

||||

- [**Registry**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-agent/registry.md): Registry that allows Netdata to provide unified cross-server dashboards.

|

||||

|

||||

- [**Replication Streaming**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-agent/metrics-streaming-replication.md): Streaming configuration where child `A`, _with_ a database and web dashboard, streams metrics to parent `B`.

|

||||

|

||||

- [**Room** or **War Room**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-cloud/rooms.md): War Rooms organize your connected nodes and provide infrastructure-wide dashboards using real-time metrics and visualizations.

|

||||

|

||||

## S

|

||||

|

||||

- [**Single Node Dashboard**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-cloud/netdata-views.md#jump-to-single-node-dashboards-1): A dashboard pre-configured with every installation of the Netdata Agent, with thousands of metrics and hundreds of interactive charts, that requires no setup.

|

||||

|

||||

<!-- No link for this file in current structure. - [**Snapshots**](https://github.com/netdata/netdata/blob/master/docs/tasks/miscellaneous/snapshot-data.md): An image of your dashboard at any given time, which can be imported into any other node running Netdata or used to generate a PDF file for your records. -->

|

||||

|

||||

- [**Space**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-cloud/spaces.md): A high-level container and virtual collaboration area where you can organize team members, access levels, and the nodes you want to monitor.

|

||||

|

||||

## T

|

||||

|

||||

- [**Template Entity Type**](https://github.com/netdata/netdata/blob/master/docs/concepts/health-monitoring/alerts.md#entity-types): Entity type that defines rules that apply to all charts of a specific context, and use the template label. Templates help you apply one entity to all disks, all network interfaces, all MySQL databases, and so on.

|

||||

|

||||

- [**Tiering**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-agent/metrics-storage.md#tiering): Tiering is a mechanism of providing multiple tiers of data with different granularity of metrics (the frequency they are collected and stored, i.e. their resolution).

|

||||

|

||||

## U

|

||||

|

||||

- [**Unlimited Scalability**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-architecture/unlimited-scalability.md): With Netdata's distributed architecture, you can seamlessly observe a couple, hundreds or

|

||||

even thousands of nodes. There are no actual bottlenecks especially if you retain metrics locally in the Agents.

|

||||

|

||||

## V

|

||||

|

||||

- [**Visualizations**](https://github.com/netdata/netdata/blob/master/docs/concepts/visualizations/from-raw-metrics-to-visualization.md): Netdata uses dimensions, contexts, and families to sort your metric data into graphs, charts, and alerts that maximize your understanding of your infrastructure and your ability to troubleshoot it, alone or on a team.

|

||||

|

||||

## W

|

||||

|

||||

- [**War Room** or **Room**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-cloud/rooms.md): War Rooms organize your connected nodes and provide infrastructure-wide dashboards using real-time metrics and visualizations.

|

||||

|

||||

## Z

|

||||

|

||||

- [**Zero Configuration**](https://github.com/netdata/netdata/blob/master/docs/concepts/netdata-architecture/zero-configuration.md): Netdata is preconfigured and capable of autodetecting and monitoring any well-known application that runs on your system. You just deploy and claim Netdata Agents in your Netdata space, and monitor them in seconds.

|

||||

|

|

@ -1,12 +1,4 @@

|

|||

<!--

|

||||

title: "Contribute to the documentation"

|

||||

sidebar_label: "Contribute to the documentation"

|

||||

custom_edit_url: "https://github.com/netdata/netdata/blob/master/docs/guidelines.md"

|

||||

sidebar_position: "10"

|

||||

learn_status: "Published"

|

||||

learn_topic_type: "Custom"

|

||||

learn_rel_path: "Contribute"

|

||||

-->

|

||||

# Contribute to the documentation

|

||||

|

||||

import Tabs from '@theme/Tabs'; import TabItem from '@theme/TabItem';

|

||||

|

||||

|

|

@ -17,750 +9,59 @@ docs (**learn.netdata.cloud**)

|

|||

|

||||

## Documentation architecture

|

||||

|

||||

Netdata docs follow two principles.

|

||||

Our documentation in https://learn.netdata.cloud is generated by markdown documents in the public

|

||||

GitHub repositories of the "netdata" organization.

|

||||

|

||||

1. Keep the documentation of each component _as close as you can to the codebase_

|

||||

2. Every component is analyzed via topic related docs.

|

||||

|

||||

To this end:

|

||||

|

||||

1. Documentation lives in every possible repo in the netdata organization. At the moment we contribute to:

|

||||

- netdata/netdata

|

||||

- netdata/learn (final site)

|

||||

- netdata/go.d.plugin

|

||||

- netdata/agent-service-discovery

|

||||

|

||||

In each of these repos you will find markdown files. These markdown files may or may not be part of the final docs. You

|

||||

can learn which documents are part of the final docs in the following section: [_How to update documentation of

|

||||

learn.netdata.cloud_](#how-to-update-documentation-of-learn-netdata-cloud)

|

||||

|

||||

2. Netdata docs processes are inspired by

|

||||

the [DITA 1.2 guidelines](http://docs.oasis-open.org/dita/v1.2/os/spec/archSpec/dita-1.2_technicalContent_overview.html)

|

||||

for Technical content.

|

||||

|

||||

## Topic types

|

||||

|

||||

### Concepts

|

||||

|

||||

A concept introduces a single feature or concept. A concept should answer the questions:

|

||||

|

||||

- What is this?

|

||||

- Why would I use it?

|

||||

|

||||

Concept topics:

|

||||

|

||||

- Are abstract ideas

|

||||

- Explain meaning or benefit

|

||||

- Can stay when specifications change

|

||||

- Provide background information

|

||||

|

||||

### Tasks

|

||||

|

||||

Concept and reference topics exist to support tasks. _The goal for users … is not to understand a concept but to

|

||||

complete a task_. A task gives instructions for how to complete a procedure.

|

||||

|

||||

Much of the uncertainty about whether a topic is a concept or a reference disappears when you have strong, solid task topics

|

||||

in place. Furthermore, task topics directly address your users and their daily tasks and help them get their job done. A

|

||||

task **must give an answer** to the **following questions**:

|

||||

|

||||

- How do I create cool espresso drinks with my new coffee machine?

|

||||

- How do I clean the milk steamer?

|

||||

|

||||

For the title text, use the structure active verb + noun. For example, _Deploy the Agent_.

|

||||

|

||||

### References

|

||||

|

||||

The reference document and information types provide for the separation of fact-based information from concepts and

|

||||

tasks. \

|

||||

Factual information may include tables and lists of specifications, parameters, parts, commands, edit-files and other

|

||||

information that the users are likely to look up. The reference information type allows fact-based content to be

|

||||

maintained by those responsible for its accuracy and consistency.

|

||||

|

||||

## Contribute to the documentation of learn.netdata.cloud

|

||||

|

||||

### Encapsulate topics into markdown files

|

||||

|

||||

Netdata uses markdown files to document everything. To implement concrete sections of these [Topic types](#topic-types)

|

||||

we encapsulate this logic as follows. Every document is characterized by its topic type ('learn_topic_type' metadata

|

||||

field). To avoid breaking every single netdata concept into numerous small markdown files each document can be either a

|

||||

single `Reference` or `Concept` or `Task` or a group of `References`, `Concepts`, `Tasks`.

|

||||

|

||||

To this end, every single topic is encapsulated into a `Heading 3 (###)` section. That means, when you have a single

|

||||

file you only make use of `Headings 4` and lower (`4, 5, 6`, for templated section or subsection). In case you want to

|

||||

include multiple topics (`Concepts`, let's say) in a single document, you use `Headings 3` to separate each concept. `Headings 2`

|

||||

are used only in case you want to logically group topics inside a document.

|

||||

|

||||

For instance:

|

||||

|

||||

```markdown

|

||||

|

||||

Small introduction of the document.

|

||||

|

||||

### Concept A

|

||||

|

||||

Lorem ipsum dolor sit amet, consectetur adipiscing elit, sed do eiusmod tempor incididunt ut labore et dolore magna

|

||||

aliqua.

|

||||

|

||||

#### Field from template 1

|

||||

|

||||

Ut enim ad minim veniam, quis nostrud exercitation ullamco laboris nisi ut aliquip ex ea commodo consequat.

|

||||

|

||||

#### Field from template 2

|

||||

|

||||

Duis aute irure dolor in reprehenderit in voluptate velit esse cillum dolore eu fugiat nulla pariatur.

|

||||

|

||||

##### Subsection 1

|

||||

|

||||

. . .

|

||||

|

||||

### Concept B

|

||||

|

||||

Excepteur sint occaecat cupidatat non proident, sunt in culpa qui officia deserunt mollit anim id est laborum.

|

||||

|

||||

#### Field from template 1

|

||||

|

||||

. . .

|

||||

|

||||

|

||||

```

|

||||

|

||||

This approach gives a clean and readable outlook in each document from a single sidebar.

|

||||

|

||||

Here you can find the preferred templates for each topic type:

|

||||

|

||||

|

||||

<Tabs>

|

||||

<TabItem value="Concept" label="Concept" default>

|

||||

|

||||

```markdown

|

||||

Small intro, give some context to the user of what you will cover on this document

|

||||

|

||||

### concept title (omit if the document describes only one concept)

|

||||

|

||||

A concept introduces a single feature or concept. A concept should answer the questions:

|

||||

|

||||

1. What is this?

|

||||

2. Why would I use it?

|

||||

|

||||

```

|

||||

|

||||

</TabItem>

|

||||

<TabItem value="Task" label="Tasks">

|

||||

|

||||

```markdown

|

||||

Small intro, give some context to the user of what you will cover on this document

|

||||

|

||||

### Task title (omit if the document describes only one task)

|

||||

|

||||

#### Prerequisite

|

||||

|

||||

Unordered list of what you will need.

|

||||

|

||||

#### Steps

|

||||

|

||||

Exact list of steps the user must follow

|

||||

|

||||

#### Expected result

|

||||

|

||||

What you expect to see when you complete the steps above

|

||||

|

||||

#### Example

|

||||

|

||||

Example configuration/actions of the task

|

||||

|

||||

#### Related reference documentation

|

||||

|

||||

List of reference docs user needs to be aware of.

|

||||

```

|

||||

|

||||

</TabItem>

|

||||

<TabItem value="Reference-collectors" label="Reference-collectors">

|

||||

|

||||

```markdown

|

||||

Small intro, give some context to the user of what you will cover on this document

|

||||

|

||||

### Reference name (omit if the document describes only one reference)

|

||||

|

||||

#### Requirements

|

||||

|

||||

Document any dependencies needed to run this module

|

||||

|

||||

#### Requirements on the monitored component

|

||||

|

||||

Document any steps the user must take to successfully monitor the application,

|

||||

for instance (create a user)

|

||||

|

||||

#### Configuration files

|

||||

|

||||

table with path and configuration files purpose

|

||||

Columns: File name | Description (Purpose in a nutshell)

|

||||

|

||||

#### Data collection

|

||||

|

||||

To make changes, see `the ./edit-config task <link>`

|

||||

|

||||

#### Auto discovery

|

||||

|

||||

##### Single node installation

|

||||

|

||||

. . . we autodetect localhost:port and what configurations are defaults

|

||||

|

||||

##### Kubernetes installations

|

||||

|

||||

. . . Service discovery, click here

|

||||

|

||||

#### Metrics

|

||||

|

||||

Columns: Metric (Context) | Scope | description (of the context) | dimensions | units (of the context) | Alert triggered

|

||||

|

||||

|

||||

#### Alerts

|

||||

|

||||

Collapsible content for every alert, just like the alert guides

|

||||

|

||||

#### Configuration options

|

||||

|

||||

Table with all the configuration options available.

|

||||

|

||||

Columns: name | description | default | file_name

|

||||

|

||||

#### Configuration example

|

||||

|

||||

Default configuration example

|

||||

|

||||

#### Troubleshoot

|

||||

|

||||

backlink to the task to run this module in debug mode (here you provide the debug flags)

|

||||

|

||||

|

||||

```

|

||||

|

||||

</TabItem>

|

||||

</Tabs>

|

||||

|

||||

### Metadata fields

|

||||

|

||||

All docs that are supposed to be part of learn.netdata.cloud have **hidden** sections at the beginning of the document. These

|

||||

sections are plain lines of text and we call them metadata. They are represented as `key : "Value"` pairs. Some of them are

|

||||

needed by our static website builder (Docusaurus); others are needed by our internal pipelines to build the docs

|

||||

(have prefix `learn_`).

|

||||

|

||||

So let's go through the different necessary metadata tags to get a document properly published on Learn:

|

||||

|

||||

| metadata_key | Value(s) | Frontmatter effect | Mandatory | Limitations |

|

||||

|:---------------------:|---------------------------------------------------------------------------------------------------------------|:-----------------------------------------------------------------------------------------------------------------------------------------------------------:|:---------:|:---------------------------------------:|

|

||||

| `title` | `String` | Title in each document | yes | |

|

||||

| `custom_edit_url` | `String` | The source GH link of the file | yes | |

|

||||

| `description` | `String or multiline String` | - | yes | |

|

||||

| `sidebar_label` | `String or multiline String` | Name in the TOC tree | yes | |

|

||||

| `sidebar_position` | `String or multiline String` | Global position in the TOC tree (local for per folder) | yes | |

|

||||

| `learn_status` | [`Published`, `Unpublished`, `Hidden`] | `Published`: Document visible in learn,<br/> `Unpublished`: Document archived in learn, <br/>`Hidden`: Document placed under learn_rel_path but hidden | yes | |

|

||||

| `learn_topic_type` | [`Concepts`, `Tasks`, `References`, `Getting Started`] | | yes | |

|

||||

| `learn_rel_path` | `Path` (the path you want this file to appear in learn<br/> without the /docs prefix and the name of the file) | | yes | |

|

||||

| `learn_autogenerated` | `Dictionary` (for internal use) | | no | Keys in the dictionary must be in `' '` |

|

||||

|

||||

:::important

|

||||

|

||||

1. If any mandatory tags are missing or entered incorrectly, the file will remain unpublished. This is by design to

|

||||

prevent non-properly tagged files from getting published.

|

||||

2. All metadata values must be enclosed in `" "`. For quoted text inside a string value, use `' '`.

|

||||

|

||||

|

||||

While Docusaurus can make use of more metadata tags than the above, these are the minimum we require to publish the file

|

||||

on Learn.

|

||||

|

||||

:::
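
Because a file with a missing mandatory tag stays unpublished, it can help to check a document before opening a pull request. The following is a minimal sketch, not part of the Learn pipeline: `check_learn_metadata` is a hypothetical helper name, and it assumes each metadata key starts its own line in the `key: "Value"` form described above. It only checks that the mandatory keys are present, not that their values are valid.

```bash
# Hypothetical helper (not an official Netdata tool): lists the mandatory
# Learn metadata keys that are missing from a markdown file.
# The key list mirrors the rows marked "Mandatory: yes" in the table above.
check_learn_metadata() {
  local file="$1"
  local key
  local missing=0
  for key in title custom_edit_url description sidebar_label sidebar_position \
             learn_status learn_topic_type learn_rel_path; do
    # A key counts as present when a line starts with `key:` followed by an opening quote.
    if ! grep -Eq "^${key}[[:space:]]*:[[:space:]]*\"" "$file"; then
      echo "missing: ${key}"
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `check_learn_metadata docs/guidelines.md` prints one `missing: <key>` line per absent key and returns a non-zero status if any key is missing.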

|

||||

|

||||

### Placing a document in learn

|

||||

|

||||

Here you can see how the metadata are parsed and create a markdown file in learn.

|

||||

|

||||

|

||||

|

||||

### Before you get started

|

||||

|

||||

Anyone interested in contributing to documentation should first read the [Netdata style guide](#styling-guide) further

|

||||

down below and the [Netdata Community Code of Conduct](https://github.com/netdata/.github/blob/main/CODE_OF_CONDUCT.md).

|

||||

|

||||

Netdata's documentation uses Markdown syntax. If you're not familiar with Markdown, read

|

||||

the [Mastering Markdown](https://guides.github.com/features/mastering-markdown/) guide from GitHub for the basics on

|

||||

creating paragraphs, styled text, lists, tables, and more, and read further down about some special

|

||||

occasions [while writing in MDX](#mdx-and-markdown).

|

||||

|

||||

### Making your first contribution

|

||||

### Improve existing documentation

|

||||

|

||||

The easiest way to contribute to Netdata's documentation is to edit a file directly on GitHub. This is perfect for small

|

||||

fixes to a single document, such as fixing a typo or clarifying a confusing sentence.

|

||||

|

||||

Click on the **Edit this page** button on any published document on [Netdata Learn](https://learn.netdata.cloud). Each

|

||||

page has two of these buttons: One beneath the table of contents, and another at the end of the document, which take you

|

||||

to GitHub's code editor. Make your suggested changes, keeping the [Netdata style guide](#styling-guide)

|

||||

in mind, and use the ***Preview changes*** button to ensure your Markdown syntax works as expected.

|

||||

Each published document on [Netdata Learn](https://learn.netdata.cloud) includes at the bottom a link to

|

||||

**Edit this page**. Clicking on that link is the recommended way to improve our documentation, as it

|

||||

leads you directly to GitHub's code editor.

|

||||

Make your suggested changes, and use the ***Preview changes*** button to ensure your Markdown syntax works as expected.

|

||||

|

||||

Under the **Commit changes** header, write a descriptive title for your requested change. Click the **Commit changes**

|

||||

button to initiate your pull request (PR).

|

||||

|

||||

Jump down to our instructions on [PRs](#making-a-pull-request) for your next steps.

|

||||

|

||||

**Note**: If you wish to contribute documentation that is more tailored to your specific infrastructure

|

||||

monitoring/troubleshooting experience, please consider submitting a blog post about your experience. Check out our [blog](https://github.com/netdata/blog#readme) repo! Any blog submissions that have

|

||||

### Create a new document

|

||||

|

||||

You can create a pull request to add a completely new markdown document in any of our public repositories.

|

||||

After the GitHub pull request is merged, our documentation team will decide where in the documentation hierarchy to publish

|

||||

that document.

|

||||

|

||||

If you wish to contribute documentation that is tailored to your specific infrastructure

|

||||

monitoring/troubleshooting experience, please consider submitting a blog post about your experience.

|

||||

Check out our [blog](https://github.com/netdata/blog#readme) repo! Any blog submissions that have

|

||||

widespread or universal application will be integrated into our permanent documentation.

|

||||

|

||||

### Edit locally

|

||||

#### Before you get started

|

||||

|

||||

Editing documentation locally is the preferred method for complex changes that span multiple documents or change the

|

||||

documentation's style or structure.

|

||||

Anyone interested in contributing significantly to documentation should first read the

|

||||

[Netdata style guide](https://github.com/netdata/netdata/blob/master/docs/contributing/style-guide.md)

|

||||

and the [Netdata Community Code of Conduct](https://github.com/netdata/.github/blob/main/CODE_OF_CONDUCT.md).

|

||||

|

||||

Create a fork of the Netdata Agent repository by visiting the [Netdata repository](https://github.com/netdata/netdata) and

|

||||

clicking on the **Fork** button.

|

||||

Netdata's documentation uses Markdown syntax. If you're not familiar with Markdown, read

|

||||

the [Mastering Markdown](https://guides.github.com/features/mastering-markdown/) guide from GitHub for the basics on

|

||||

creating paragraphs, styled text, lists, tables, and more.

|

||||

|

||||

GitHub will ask you where you want to clone the repository. When finished, you end up at the index of your forked

|

||||

Netdata Agent repository. Clone your fork to your local machine:

|

||||

#### Edit locally

|

||||

|

||||

```bash

|

||||

git clone https://github.com/YOUR-GITHUB-USERNAME/netdata.git

|

||||

```

|

||||

|

||||

Create a new branch using `git checkout -b BRANCH-NAME`. Use your favorite text editor to make your changes, keeping

|

||||

the [Netdata style guide](https://github.com/netdata/netdata/blob/master/docs/contributing/style-guide.md) in mind. Add, commit, and push changes to your fork. When you're

|

||||

finished, visit the [Netdata Agent Pull requests](https://github.com/netdata/netdata/pulls) to create a new pull request

|

||||

based on the changes you made in the new branch of your fork.

|

||||

Editing documentation locally is the preferred method for completely new documents, or complex changes that span multiple

|

||||

documents. Clone the repository where you wish to make your changes, work on a new branch and create a pull request

|

||||

with that branch.

|

||||

|

||||

### Making a pull request

|

||||

|

||||

Pull requests (PRs) should be concise and informative. See our [PR guidelines](/contribute/handbook#pr-guidelines) for

|

||||

Pull requests (PRs) should be concise and informative. See our

|

||||

[PR guidelines](https://github.com/netdata/.github/blob/main/CONTRIBUTING.md#pr-guidelines) for

|

||||

specifics.

|

||||

|

||||

- The title must follow the [imperative mood](https://en.wikipedia.org/wiki/Imperative_mood) and be no more than ~50

|

||||

characters.

|

||||

- The description should explain what was changed and why. Verify that you tested any code or processes that you are

|

||||

trying to change.
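
The length guideline for titles is easy to check before you open the PR. This is a minimal sketch under stated assumptions: `check_pr_title` is a hypothetical helper, and the default limit of 50 simply mirrors the "~50 characters" guideline above. It cannot tell whether the title is in the imperative mood, so that part still needs a human eye.

```bash
# Hypothetical helper (not an official Netdata tool): succeeds when a PR title
# fits within the character limit (default 50, per the guideline above).
check_pr_title() {
  local title="$1"
  local max="${2:-50}"
  if [ "${#title}" -le "$max" ]; then
    echo "ok (${#title} chars)"
  else
    echo "too long (${#title} chars, limit ${max})"
    return 1
  fi
}
```

For example, `check_pr_title "Fix typo in streaming docs"` succeeds, while a title longer than the limit prints its length and returns a non-zero status.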

|

||||

|

||||

The Netdata team will review your PR and assess it for correctness, conciseness, and overall quality. We may point to

|

||||

specific sections and ask for additional information or other fixes.

|

||||

|

||||

After merging your PR, the Netdata team rebuilds the [documentation site](https://learn.netdata.cloud) to publish the

|

||||

changed documentation.

|

||||

|

||||

## Styling guide

|

||||

|

||||

The *Netdata style guide* establishes editorial guidelines for any writing produced by the Netdata team or the Netdata

|

||||

community, including documentation, articles, in-product UX copy, and more. Both internal Netdata teams and external

|

||||

contributors to any of Netdata's open-source projects should reference and adhere to this style guide as much as

|

||||

possible.

|

||||

|

||||

Netdata's writing should **empower** and **educate**. You want to help people understand Netdata's value, encourage them

|

||||

to learn more, and ultimately use Netdata's products to democratize monitoring in their organizations. To achieve these

|

||||

goals, your writing should be:

|

||||

|

||||

- **Clear**. Use simple words and sentences. Use strong, direct, and active language that encourages readers to action.

|

||||

- **Concise**. Provide solutions and answers as quickly as possible. Give users the information they need right now,

|

||||

along with opportunities to learn more.

|

||||

- **Universal**. Think of yourself as a guide giving a tour of Netdata's products, features, and capabilities to a

|

||||

diverse group of users. Write to reach the widest possible audience.

|

||||

|

||||

You can achieve these goals by reading and adhering to the principles outlined below.

|

||||

|

||||

If you're not familiar with Markdown, read

|

||||

the [Mastering Markdown](https://guides.github.com/features/mastering-markdown/) guide from GitHub for the basics on

|

||||

creating paragraphs, styled text, lists, tables, and more.

|

||||

|

||||

The following sections describe situations in which a specific syntax is required.

|

||||

|

||||

#### Syntax standards (`remark-lint`)

|

||||

|

||||

The Netdata team uses [`remark-lint`](https://github.com/remarkjs/remark-lint) for Markdown code styling.

|

||||

|

||||

- Use a maximum of 120 characters per line.

|

||||

- Begin headings with hashes, such as `# H1 heading`, `## H2 heading`, and so on.

|

||||

- Use `_` for italics/emphasis.

|

||||

- Use `**` for bold.

|

||||

- Use dashes `-` to begin an unordered list, and put a single space after the dash.

|

||||

- Tables should be padded so that pipes line up vertically with added whitespace.

|

||||

|

||||

If you want to see all the settings, open the

|

||||

[`remarkrc.js`](https://github.com/netdata/netdata/blob/master/.remarkrc.js) file in the `netdata/netdata` repository.
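
If you only want a quick look at the first rule before running the linter, a small shell function can list overlong lines. This is a rough sketch and not a replacement for `remark-lint`: `long_lines` is a hypothetical helper, it checks nothing but line length, and depending on your `awk` implementation and locale it may count bytes rather than characters.

```bash
# Hypothetical helper (not part of remark-lint): prints "line-number: length"
# for every line in a file that is longer than the limit (default 120).
long_lines() {
  local file="$1"
  local limit="${2:-120}"
  awk -v limit="$limit" 'length($0) > limit { printf "%d: %d\n", NR, length($0) }' "$file"
}
```

For example, `long_lines docs/guidelines.md` prints nothing when every line fits within 120 characters.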

|

||||

|

||||

#### MDX and markdown

|

||||

|

||||

While writing in Docusaurus, you might want to take advantage of its features that are supported in MDX formatted files.

|

||||

One of those that we use is [Tabs](https://docusaurus.io/docs/next/markdown-features/tabs). They use an HTML syntax,

|

||||

which requires some changes in the way we write markdown inside them.

|

||||

|

||||

In detail:

|

||||

|

||||

Due to a bug in Docusaurus, we prefer to use `<h1>heading</h1>` instead of `# H1` so that Docusaurus doesn't render the

|

||||

contents of all Tabs on the right hand side, while not being able to navigate

|

||||

them (see the [related issue](https://github.com/facebook/docusaurus/issues/7008)).

|

||||

|

||||

You can use markdown syntax for every other styling you want to do except Admonitions:

|

||||

For admonitions, follow [this](https://docusaurus.io/docs/markdown-features/admonitions#usage-in-jsx) guide to use

|

||||

admonitions inside JSX. While writing in JSX, all the markdown stylings have to be in HTML format to be rendered

|

||||

properly.

|

||||

|

||||

#### Admonitions

|

||||

|

||||

Use admonitions cautiously. Admonitions draw the user's attention, so we advise you to use them only for side

|

||||

content/info, without significantly interrupting the document flow.

|

||||

|

||||

You can find the supported admonitions in the Docusaurus [documentation](https://docusaurus.io/docs/markdown-features/admonitions).

|

||||

|

||||

#### Images

|

||||

|

||||

Don't rely on images to convey features, ideas, or instructions. Accompany every image with descriptive alt text.

|

||||

|

||||

In Markdown, use the standard image syntax, `![]()`, and place the alt text between the

|

||||

brackets `[]`. Here's an example using our logo:

|

||||

|

||||

```markdown

|

||||

|

||||

```

|

||||

|

||||

Reference in-product text, code samples, and terminal output with actual text content, not screen captures or other

|

||||

images. Place the text in an appropriate element, such as a blockquote or code block, so all users can parse the

|

||||

information.

|

||||

|

||||

#### Syntax highlighting

|

||||

|

||||

Our documentation site at [learn.netdata.cloud](https://learn.netdata.cloud) uses

|

||||

[Prism](https://v2.docusaurus.io/docs/markdown-features#syntax-highlighting) for syntax highlighting. Netdata can use

|

||||

any of

|

||||

the [supported languages by prism-react-renderer](https://github.com/FormidableLabs/prism-react-renderer/blob/master/src/vendor/prism/includeLangs.js)

|

||||

.

|

||||

|

||||

If no language is specified, Prism tries to guess the language based on its content.

|

||||

|

||||

Include the language directly after the three backticks (```` ``` ````) that start the code block. For highlighting C

|

||||

code, for example:

|

||||

|

||||

````c

|

||||

```c

|

||||

inline char *health_stock_config_dir(void) {

|

||||

char buffer[FILENAME_MAX + 1];

|

||||

snprintfz(buffer, FILENAME_MAX, "%s/health.d", netdata_configured_stock_config_dir);

|

||||

return config_get(CONFIG_SECTION_DIRECTORIES, "stock health config", buffer);

|

||||

}

|

||||

```

|

||||

````

|

||||

|

||||

And the prettified result:

|

||||

|

||||

```c

|

||||

inline char *health_stock_config_dir(void) {

|

||||

char buffer[FILENAME_MAX + 1];

|

||||

snprintfz(buffer, FILENAME_MAX, "%s/health.d", netdata_configured_stock_config_dir);

|

||||

return config_get(CONFIG_SECTION_DIRECTORIES, "stock health config", buffer);

|

||||

}

|

||||

```

|

||||

|

||||

Prism also supports titles and line highlighting. See

|

||||

the [Docusaurus documentation](https://v2.docusaurus.io/docs/markdown-features#code-blocks) for more information.

|

||||

|

||||

## Language, grammar, and mechanics

|

||||

|

||||

#### Voice and tone

|

||||

|

||||

One way we write empowering, educational content is by using a consistent voice and an appropriate tone.

|

||||

|

||||

*Voice* is like your personality, which doesn't really change day to day.

|

||||

|

||||

*Tone* is how you express your personality. Your expression changes based on your attitude or mood, or based on who

|

||||

you're around. In writing, you reflect tone in your word choice, punctuation, sentence structure, or even the use of

|

||||

emoji.

|

||||

|

||||

The same idea about voice and tone applies to organizations, too. Our voice shouldn't change much between two pieces of

|

||||

content, no matter who wrote each, but the tone might be quite different based on who we think is reading.

|

||||

|

||||

For example, a [blog post](https://www.netdata.cloud/blog/) and a [press release](https://www.netdata.cloud/news/)

|

||||

should have a similar voice, despite most often being written by different people. However, blog posts are relaxed and

|

||||

witty, while press releases are focused and academic. You won't see any emoji in a press release.

|

||||

|

||||

##### Voice

|

||||

|

||||

Netdata's voice is authentic, passionate, playful, and respectful.

|

||||

|

||||

- **Authentic** writing is honest and fact-driven. Focus on Netdata's strengths while accurately communicating what

|

||||

Netdata can and cannot do, and emphasize technical accuracy over hard sells and marketing jargon.

|

||||

- **Passionate** writing is strong and direct. Be a champion for the product or feature you're writing about, and let

|

||||

your unique personality and writing style shine.

|

||||

- **Playful** writing is friendly, thoughtful, and engaging. Don't take yourself too seriously, as long as it's not at

|

||||

the expense of Netdata or any of its users.

|

||||

- **Respectful** writing treats people the way you want to be treated. Prioritize giving solutions and answers as

|

||||

quickly as possible.

|

||||

|

||||

##### Tone

|

||||

|

||||

Netdata's tone is fun and playful, but clarity and conciseness come first. We also tend to be informal, and aren't

|

||||

afraid of a playful joke or two.

|

||||

|

||||

While we have general standards for voice and tone, we do want every individual's unique writing style to reflect in

|

||||

published content.

|

||||

|

||||

#### Universal communication

|

||||

|

||||

Netdata is a global company in every sense, with employees, contributors, and users from around the world. We strive to

|

||||

communicate in a way that is clear and easily understood by everyone.

|

||||

|

||||

Here are some guidelines, pointers, and questions to be aware of as you write to ensure your writing is universal. Some

|

||||

of these are expanded into individual sections in

|

||||

the [language, grammar, and mechanics](#language-grammar-and-mechanics) section below.

|

||||

|

||||

- Would this language make sense to someone who doesn't work here?

|

||||

- Could someone quickly scan this document and understand the material?

|

||||

- Create an information hierarchy with key information presented first and clearly called out to improve scannability.

|

||||

- Avoid directional language like "sidebar on the right of the page" or "header at the top of the page" since

|

||||

presentation elements may adapt for devices.

|

||||

- Use descriptive links rather than "click here" or "learn more".

|

||||

- Include alt text for images and image links.

|

||||

- Ensure any information contained within a graphic element is also available as plain text.

|

||||

- Avoid idioms that may not be familiar to the user or that may not make sense when translated.

|

||||

- Avoid local, cultural, or historical references that may be unfamiliar to users.

|

||||

- Prioritize active, direct language.

|

||||

- Avoid referring to someone's age unless it is directly relevant; likewise, avoid referring to people with age-related

|

||||

descriptors like "young" or "elderly."

|

||||